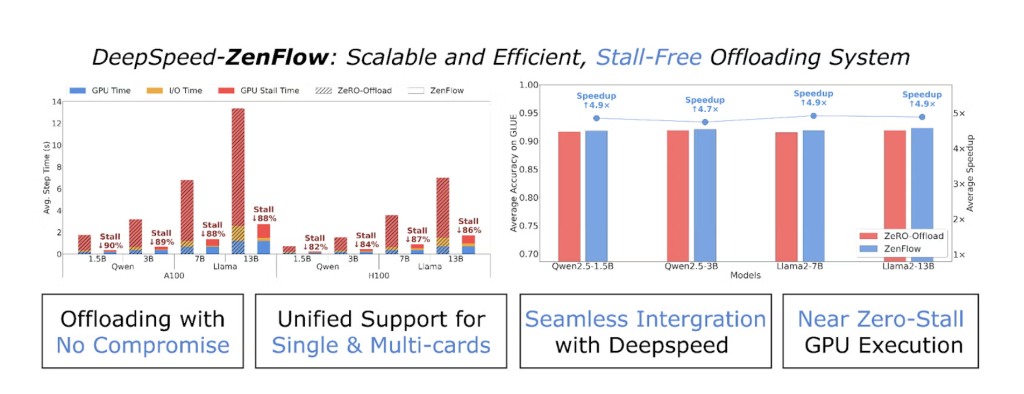

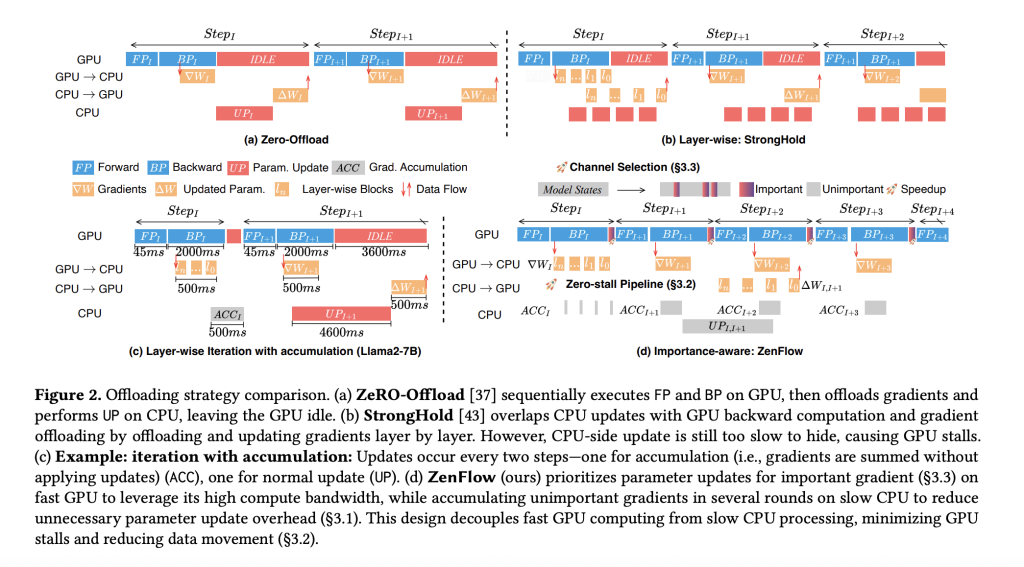

The DeepSpeed team has unveiled ZenFlow, a new offloading engine designed to overcome a major bottleneck in large language model (LLM) training: CPU-induced GPU stalls. Offloading optimizer states and gradients to CPU memory reduces GPU memory pressure, but traditional frameworks like ZeRO-Offload and ZeRO-Infinity often leave expensive GPUs idle for most of each training step while they wait on slow CPU updates and PCIe transfers. For example, fine-tuning Llama 2-7B on 4× A100 GPUs with full offloading can balloon step time from 0.5s to over 7s, a 14× slowdown. ZenFlow eliminates these stalls by decoupling GPU and CPU computation with importance-aware pipelining, delivering up to 5× end-to-end speedup over ZeRO-Offload and reducing GPU stalls by more than 85%.
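To make the "idle for most of each training step" point concrete, here is a back-of-the-envelope calculation using the two step times quoted above. The idle share is only an estimate: it assumes the GPU still needs roughly 0.5s of useful work per step once offloading is enabled, which the article does not state.

```python
# Step times quoted in the text, in seconds.
baseline_step_s = 0.5   # without offloading
offload_step_s = 7.0    # with full offloading (the text says "over 7s")

slowdown = offload_step_s / baseline_step_s

# Assumption (not from the article): useful GPU work per step stays at about
# 0.5 s, so the remainder of the step is spent waiting on the CPU and PCIe.
gpu_idle_fraction = (offload_step_s - baseline_step_s) / offload_step_s

print(f"slowdown: {slowdown:.0f}x")
print(f"estimated GPU idle share: {gpu_idle_fraction:.0%}")
```

Under that assumption the GPU would sit idle for roughly nine tenths of every step, which is the gap ZenFlow targets.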

How ZenFlow Works

- Importance-Aware Gradient Updates: ZenFlow prioritizes the top-k most impactful gradients for immediate GPU updates, while deferring less important gradients to asynchronous CPU-side accumulation. This reduces per-step gradient traffic by nearly 50% and PCIe bandwidth pressure by about 2× compared to ZeRO-Offload.

- Bounded-Asynchronous CPU Accumulation: Non-critical gradients are batched and updated asynchronously on the CPU, hiding CPU work behind GPU compute. This ensures GPUs are always busy, avoiding stalls and maximizing hardware utilization.

- Lightweight Gradient Selection: ZenFlow replaces full gradient AllGather with a lightweight, per-column gradient norm proxy, reducing communication volume by over 4,000× with minimal impact on accuracy. This enables efficient scaling across multi-GPU clusters.

- Zero Code Changes, Minimal Configuration: ZenFlow is built into DeepSpeed and requires only minor JSON configuration changes. Users set parameters like `topk_ratio` (e.g., 0.05 for the top 5% of gradients) and enable adaptive strategies by setting `select_strategy`, `select_interval`, and `update_interval` to `"auto"`.

- Auto-Tuned Performance: The engine adapts update intervals at runtime, eliminating the need for manual tuning and ensuring maximum efficiency as training dynamics evolve.
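The first three ideas above can be illustrated with a toy, single-process sketch. It is not DeepSpeed's implementation: it uses plain SGD on nested Python lists, runs everything synchronously, and the names `column_norms_sq`, `select_topk_columns`, and `ToyZenFlowStepper` are hypothetical. It only shows the control flow: rank gradient columns by norm, apply the top-ranked columns immediately, and accumulate the rest until every `update_interval`-th step.

```python
import math


def column_norms_sq(grad):
    """Squared L2 norm of each column of a row-major 2-D gradient."""
    return [sum(row[c] ** 2 for row in grad) for c in range(len(grad[0]))]


def select_topk_columns(grad, topk_ratio):
    """Return the indices of the highest-norm columns (always at least one)."""
    norms = column_norms_sq(grad)
    k = max(1, math.ceil(topk_ratio * len(norms)))
    ranked = sorted(range(len(norms)), key=lambda c: norms[c], reverse=True)
    return set(ranked[:k])


class ToyZenFlowStepper:
    """Toy model: immediate updates for selected columns, deferred for the rest."""

    def __init__(self, weights, topk_ratio=0.5, update_interval=4, lr=0.1):
        self.weights = weights
        self.topk_ratio = topk_ratio
        self.update_interval = update_interval
        self.lr = lr
        self.step_count = 0
        # Stand-in for the CPU-side accumulation buffer.
        self.deferred = [[0.0] * len(weights[0]) for _ in weights]

    def step(self, grad):
        selected = select_topk_columns(grad, self.topk_ratio)
        for r, row in enumerate(grad):
            for c, g in enumerate(row):
                if c in selected:
                    self.weights[r][c] -= self.lr * g  # applied right away
                else:
                    self.deferred[r][c] += g           # accumulated for later
        self.step_count += 1
        if self.step_count % self.update_interval == 0:
            self._flush()
        return selected

    def _flush(self):
        """Apply the accumulated low-importance gradients, then clear them."""
        for r, row in enumerate(self.deferred):
            for c, g in enumerate(row):
                self.weights[r][c] -= self.lr * g
                row[c] = 0.0


grad = [[0.1, 2.0, 0.0, -0.2],
        [0.0, -1.0, 0.1, 0.3]]
stepper = ToyZenFlowStepper([[0.0] * 4 for _ in range(2)],
                            topk_ratio=0.25, update_interval=2)
print(stepper.step(grad))  # {1}: only the high-norm column is updated now
```

In this toy layout, ranking needs one number per column rather than every gradient entry, so the data used for selection shrinks by a factor equal to the number of rows. In the real engine the deferred work overlaps with GPU compute instead of running inline as it does here.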

Performance Highlights

| Feature | Impact |
|---|---|
| Up to 5× end-to-end speedup | Faster convergence, lower costs |
| >85% reduction in GPU stalls | Higher GPU utilization |
| ≈2× lower PCIe traffic | Less cluster bandwidth pressure |
| No accuracy loss on GLUE benchmarks | Maintains model quality |
| Lightweight gradient selection | Scales efficiently to multi-GPU clusters |
| Auto-tuning | No manual parameter tuning required |

Practical Usage

Integration: ZenFlow is a drop-in extension for DeepSpeed’s ZeRO-Offload. No code changes are needed; only configuration updates in the DeepSpeed JSON file are required.

Example Use Case: The DeepSpeedExamples repository includes a ZenFlow fine-tuning example on the GLUE benchmark. Users can run it with a single script (`bash finetune_gpt_glue.sh`), following the setup and configuration instructions in the repo’s README. The example demonstrates CPU optimizer offload with ZenFlow asynchronous updates, providing a practical starting point for experimentation.

Configuration Example:

    "zero_optimization": {
      "stage": 2,
      "offload_optimizer": {
        "device": "cpu",
        "pin_memory": true
      },
      "zenflow": {
        "topk_ratio": 0.05,
        "select_strategy": "auto",
        "select_interval": "auto",
        "update_interval": 4,
        "full_warm_up_rounds": 0,
        "overlap_step": true
      }
    }
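The configuration above is a fragment and has to sit inside the top-level DeepSpeed JSON object. As a minimal sketch, the snippet below embeds it in a Python dict, runs a few sanity checks, and serializes it. The `check_zenflow_section` helper is hypothetical and is not DeepSpeed's own validation, and the batch-size value is a placeholder chosen for illustration.

```python
import json

# The fragment from the article, copied as-is.
zero_optimization = {
    "stage": 2,
    "offload_optimizer": {"device": "cpu", "pin_memory": True},
    "zenflow": {
        "topk_ratio": 0.05,
        "select_strategy": "auto",
        "select_interval": "auto",
        "update_interval": 4,
        "full_warm_up_rounds": 0,
        "overlap_step": True,
    },
}

# Assumption: the batch size is a placeholder; a real config carries more keys.
ds_config = {
    "train_micro_batch_size_per_gpu": 8,
    "zero_optimization": zero_optimization,
}


def check_zenflow_section(config):
    """Hypothetical sanity checks for this sketch only."""
    zf = config["zero_optimization"]["zenflow"]
    if not 0.0 < zf["topk_ratio"] <= 1.0:
        raise ValueError("topk_ratio must be in (0, 1]")
    for key in ("select_interval", "update_interval"):
        value = zf[key]
        is_positive_int = (
            isinstance(value, int) and not isinstance(value, bool) and value > 0
        )
        if value != "auto" and not is_positive_int:
            raise ValueError(f"{key} must be 'auto' or a positive integer")
    return zf


check_zenflow_section(ds_config)
config_text = json.dumps(ds_config, indent=2)
print(config_text)
```

The printed text can be saved to the JSON file that the training script passes to DeepSpeed.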

Getting Started: Refer to the DeepSpeed-ZenFlow fine-tuning example and the official tutorial for step-by-step guidance.

Summary

ZenFlow is a significant leap forward for anyone training or fine-tuning large language models on limited GPU resources. By effectively eliminating CPU-induced GPU stalls, it unlocks higher throughput and lower total cost of training, without sacrificing model accuracy. The approach is particularly valuable for organizations scaling LLM workloads across heterogeneous hardware or seeking to maximize GPU utilization in cloud or on-prem clusters.

For technical teams, the combination of automatic tuning, minimal configuration, and seamless integration with DeepSpeed makes ZenFlow both accessible and powerful. The provided examples and documentation lower the barrier to adoption, enabling rapid experimentation and deployment.

ZenFlow redefines offloading for LLM training, delivering stall-free, high-throughput fine-tuning with minimal configuration overhead—a must-try for anyone pushing the boundaries of large-scale AI.

Check out the Technical Paper, GitHub Page and Blog.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.