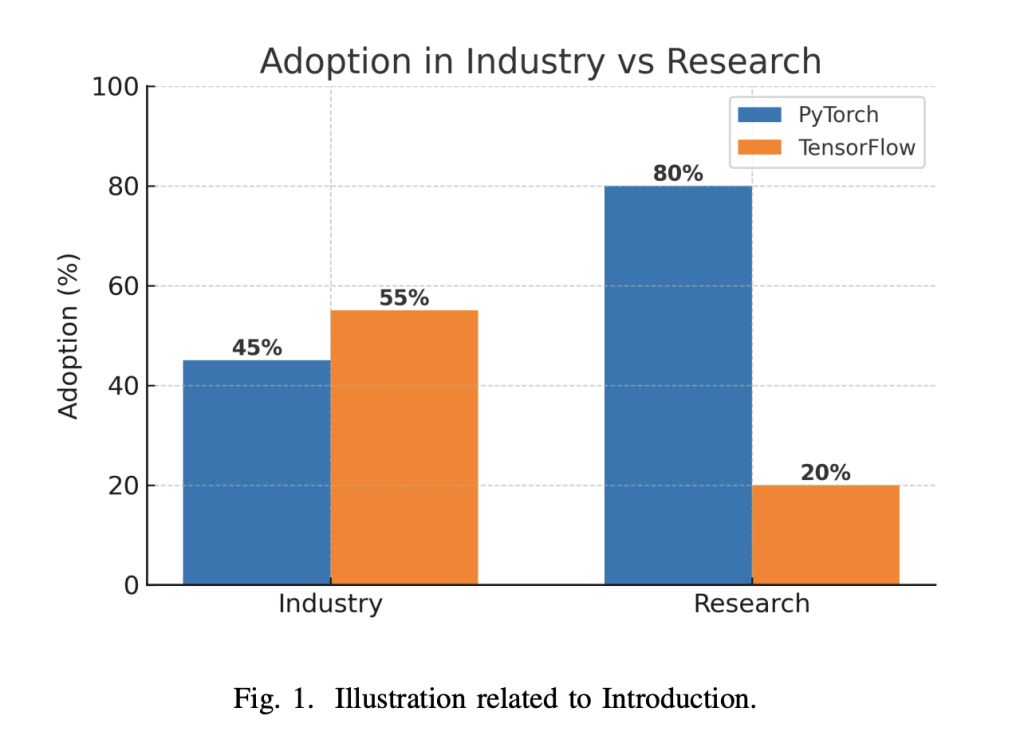

The choice between PyTorch and TensorFlow remains one of the most debated decisions in AI development. Both frameworks have evolved dramatically since their inception, converging in some areas while maintaining distinct strengths. This article draws on a comprehensive survey paper from Alfaisal University, Saudi Arabia, and synthesizes its findings on usability, performance, deployment, and ecosystem to guide practitioners in 2025.

Philosophy & Developer Experience

PyTorch burst onto the scene with a dynamic (define-by-run) paradigm, making model development feel like regular Python programming. Researchers embraced this immediacy: debugging is straightforward, and models can be altered on the fly. PyTorch’s architecture—centered around torch.nn.Module—encourages modular, object-oriented design. Training loops are explicit and flexible, giving full control over every step, which is ideal for experimentation and custom architectures.
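To make define-by-run concrete, here is a toy scalar autograd in plain Python. The `Value` class and `forward` function are hypothetical illustrations, not PyTorch's API, and real frameworks operate on tensors with far more machinery. The sketch only shows the core idea: the computation graph is recorded while ordinary Python executes, so a plain `if` can change the graph from one call to the next.

```python
class Value:
    """Toy scalar that records how it was computed (define-by-run); not PyTorch's API."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents          # Values this node was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        # Topologically order the graph that the forward pass just recorded.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Reverse-mode chain rule: push each node's gradient to its parents.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


def forward(w, x):
    # Plain Python control flow decides which operations get recorded.
    if x > 0:
        return w * x * x
    return w * x + 1.0


w = Value(2.0)
y = forward(w, 3.0)    # records w * x * x because x > 0
y.backward()
print(y.data, w.grad)  # 18.0 9.0
```

Because the graph is rebuilt on every call, `forward(w, -1.0)` simply records a different graph (`w * x + 1.0`) with no extra ceremony, which is the flexibility described above.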

TensorFlow, initially a static (define-and-run) framework, pivoted with TensorFlow 2.x to eager execution by default. The Keras high-level API, now deeply integrated, simplifies many standard workflows: users can define models with tf.keras.Model and train them with a single call such as model.fit(), which cuts boilerplate for common tasks. However, highly custom training procedures may require dropping down to TensorFlow’s lower-level APIs, which can add complexity.

Debugging in PyTorch is often easier thanks to Pythonic tracebacks and the ability to use standard Python tools. TensorFlow’s errors, especially under graph compilation with @tf.function, can be less transparent. Still, TensorFlow’s integration with TensorBoard provides robust visualization and logging out of the box, and PyTorch now supports TensorBoard as well through torch.utils.tensorboard.SummaryWriter.
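Graph compilation can be illustrated with an equally small toy tracer. `Symbol` and `trace` are hypothetical names and the mechanism is far simpler than TensorFlow’s, but it reproduces a documented property of `@tf.function`: the Python body executes while the function is traced, and later calls with a compatible input signature reuse the traced graph. Python side effects (a `print`, appending to a list) therefore happen only at trace time, one of the behaviors that makes graph mode harder to reason about than eager code.

```python
class Symbol:
    """Toy symbolic value: records an operation instead of computing it."""

    def __init__(self, graph, op, args=()):
        self.op = op
        self.args = args
        self.graph = graph
        graph.append(self)  # creation order is a valid execution order

    def __add__(self, other):
        return Symbol(self.graph, "add", (self, other))

    def __mul__(self, other):
        return Symbol(self.graph, "mul", (self, other))


def trace(fn):
    """Define-and-run: call fn once on a placeholder, then replay the recorded graph."""
    graph = []
    placeholder = Symbol(graph, "input")
    output = fn(placeholder)  # the Python body of fn runs only here, at trace time

    def run(x):
        values = {}
        for node in graph:
            if node.op == "input":
                values[node] = x
                continue
            a, b = (values[arg] if isinstance(arg, Symbol) else arg for arg in node.args)
            values[node] = a + b if node.op == "add" else a * b
        return values[output]

    return run


python_calls = []


def affine(x):
    python_calls.append("traced")  # a Python side effect, like print() inside @tf.function
    return x * 3.0 + 1.0


compiled = trace(affine)
print(compiled(2.0), compiled(5.0), len(python_calls))  # 7.0 16.0 1
```

The graph is computed correctly on every call, yet the side effect fired once, during tracing. In real TensorFlow, `tf.print` is the graph-aware alternative when output is needed on each call.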

Performance: Training, Inference, & Memory

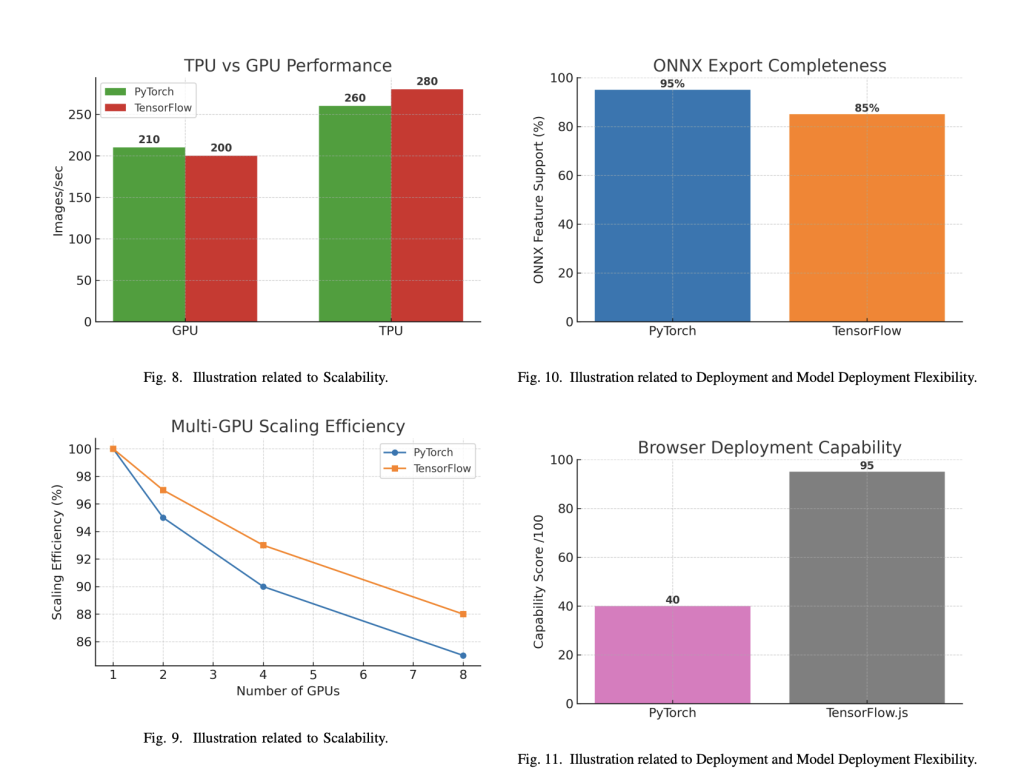

Training Throughput: Benchmark results are nuanced. PyTorch often trains faster on larger datasets and models, thanks to efficient memory management and optimized CUDA backends. For example, in experiments by Novac et al. (2022), PyTorch completed a CNN training run 25% faster than TensorFlow, with consistently quicker per-epoch times. On very small inputs, TensorFlow sometimes has an edge due to lower overhead, but PyTorch pulls ahead as input size grows.

Inference Latency: For small-batch inference, PyTorch frequently delivers lower latency, up to 3× faster than TensorFlow (Keras) in some image classification tasks (Bečirović et al., 2025). This advantage diminishes with larger inputs, where the two frameworks are more comparable. TensorFlow’s static graph optimization historically gave it a deployment edge, but PyTorch’s TorchScript and ONNX support have closed much of this gap.

Memory Usage: PyTorch’s memory allocator is praised for handling large tensors and dynamic architectures gracefully, while TensorFlow’s default behavior of pre-allocating GPU memory can lead to fragmentation in multi-process environments. Fine-grained memory control is possible in TensorFlow, but PyTorch’s approach is generally more flexible for research workloads.

Scalability: Both frameworks now support distributed training effectively. TensorFlow retains a slight lead in TPU integration and large-scale deployments, but PyTorch’s Distributed Data Parallel (DDP) scales efficiently across GPUs and nodes. For most practitioners, the scalability gap has narrowed significantly.
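Figures such as "25% faster" or "up to 3×" depend heavily on how they are measured. Below is a minimal, framework-agnostic timing sketch; `median_latency` and `speedup` are hypothetical helpers, not part of either library. It discards warm-up calls, which absorb one-time costs such as graph tracing and allocator growth, and reports the median. One caveat: GPU kernels launch asynchronously, so the timed callable should block until its work finishes, for example by calling `torch.cuda.synchronize()` in PyTorch or converting the result with `.numpy()` in TensorFlow.

```python
import statistics
import time
from typing import Callable


def median_latency(fn: Callable[[], object], warmup: int = 10, repeats: int = 50) -> float:
    """Median wall-clock seconds per call of fn, measured after warm-up runs."""
    for _ in range(warmup):
        fn()  # absorb one-time costs (tracing, caching, allocator growth)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()  # on GPU, fn must block until its work has finished
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)  # less sensitive to outliers than the mean


def speedup(baseline_seconds: float, candidate_seconds: float) -> float:
    """Ratio of latencies: 3.0 means the candidate is 3x faster than the baseline."""
    return baseline_seconds / candidate_seconds


if __name__ == "__main__":
    # Stand-in workloads; in practice, wrap each framework's forward pass instead.
    slow = median_latency(lambda: sum(i * i for i in range(30_000)))
    fast = median_latency(lambda: sum(i * i for i in range(10_000)))
    print(f"speedup: {speedup(slow, fast):.1f}x")
```

Comparing both frameworks with the same harness, batch sizes, and input shapes is what makes a latency ratio meaningful; as the benchmarks above show, the ranking can flip as input size changes.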

Deployment: From Research to Production

TensorFlow offers a mature, end-to-end deployment ecosystem:

- Mobile/Embedded: TensorFlow Lite (and Lite Micro) leads for on-device inference, with robust quantization and hardware acceleration.

- Web: TensorFlow.js enables training and inference directly in browsers.

- Server: TensorFlow Serving provides optimized, versioned model deployment.

- Edge: TensorFlow Lite Micro is the de facto standard for microcontroller-scale ML (TinyML).

PyTorch’s deployment options have matured as well:

- Mobile: PyTorch Mobile supports Android/iOS, though with a larger runtime footprint than TFLite.

- Server: TorchServe, developed with AWS, provides scalable model serving.

- Cross-Platform: ONNX export allows PyTorch models to run in diverse environments via ONNX Runtime.
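The quantization that TensorFlow Lite applies rests on an affine mapping, real_value = (int_value - zero_point) × scale, as given in TFLite’s 8-bit quantization specification. Below is a simplified, pure-Python sketch of per-tensor asymmetric int8 quantization; the helper names are hypothetical and this is not the converter’s code (in practice it is enabled through `tf.lite.TFLiteConverter` with `converter.optimizations = [tf.lite.Optimize.DEFAULT]`). The spec uses this asymmetric form for activations, while weights are quantized symmetrically with a zero point of 0.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def quant_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Scale and zero point mapping [x_min, x_max] onto [QMIN, QMAX]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # keep 0.0 exactly representable
    scale = (x_max - x_min) / (QMAX - QMIN) or 1.0   # guard against an all-zero tensor
    zero_point = round(QMIN - x_min / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: List[float], scale: float, zero_point: int) -> List[int]:
    # Round to the nearest step, shift by the zero point, clamp to int8.
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs: List[int], scale: float, zero_point: int) -> List[float]:
    # real_value = (int_value - zero_point) * scale
    return [(q - zero_point) * scale for q in qs]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point = quant_params(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
print(q)
print([round(r, 3) for r in restored])
```

Each value is stored in one byte instead of the four used by float32, at the cost of a rounding error of roughly scale / 2 for values inside the calibrated range; zero maps back to exactly 0.0, which matters for padding and ReLU outputs.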

Interoperability is increasingly important. Both frameworks support ONNX, enabling model exchange. Keras 3.0 now supports multiple backends (TensorFlow, JAX, PyTorch), further blurring the lines between ecosystems.

Ecosystem & Community

PyTorch dominates academic research, with approximately 80% of NeurIPS 2023 papers using PyTorch. Its ecosystem is modular, with many specialized community packages (e.g., Hugging Face Transformers for NLP, PyTorch Geometric for GNNs). The move to the Linux Foundation ensures broad governance and sustainability.

TensorFlow remains a powerhouse in industry, especially for production pipelines. Its ecosystem is more monolithic, with official libraries for vision (tf.image, KerasCV), NLP (TensorFlow Text), and probabilistic programming (TensorFlow Probability). TensorFlow Hub and TFX streamline model sharing and MLOps.

Stack Overflow’s 2023 survey showed TensorFlow slightly ahead in industry, while PyTorch leads in research. Both have massive, active communities, extensive learning resources, and annual developer conferences.

Use Cases & Industry Applications

Computer Vision: TensorFlow’s Object Detection API and KerasCV are widely used in production. PyTorch is favored for research (e.g., Meta’s Detectron2) and innovative architectures (GANs, Vision Transformers).

Natural Language Processing: The rise of transformers has seen PyTorch surge ahead in research, with Hugging Face leading the charge. TensorFlow still powers large-scale systems like Google Translate, but PyTorch is the go-to for new model development.

Recommender Systems & Beyond: Meta’s DLRM (PyTorch) and Google’s RecNet (TensorFlow) exemplify framework preferences at scale. Both frameworks are used in reinforcement learning, robotics, and scientific computing, with PyTorch often chosen for flexibility and TensorFlow for production robustness.

Conclusion: Choosing the Right Tool

There is no universal “best” framework. The decision hinges on your context:

- PyTorch: Opt for research, rapid prototyping, and custom architectures. It excels in flexibility and ease of debugging, and it is the community favorite for cutting-edge work.

- TensorFlow: Choose for production scalability, mobile/web deployment, and integrated MLOps. Its tooling and deployment options are unmatched for enterprise pipelines.

In 2025, the gap between PyTorch and TensorFlow continues to narrow. The frameworks are borrowing each other’s best ideas, and interoperability is improving. For most teams, the best choice is the one that aligns with your project’s requirements, team expertise, and deployment targets—not an abstract notion of technical superiority.

Both frameworks are here to stay, and the real winner is the AI community, which benefits from their competition and convergence.


Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.