The way for a company to win the ChatBot game, in the sense of profitability, is, probably, not to play. Cupertino’s AI contrarianism, betting on the device rather than the cloud and on the routinized use-case rather than the wide-open ChatBot, is, probably, not just defensible as a bet but a bet at favorable odds…

This at least looks, to me, relatively clear: Apple’s AI strategy is not a problem.

The company’s bet on on-device, channeled intelligence and its focus on privacy and user experience are, I think, not just defensible but shrewd choices.

The trouble is execution. Siri is still a punchline. Apple’s AI features, when they appear, too often feel like demos or afterthoughts. It doesn’t just work. And even if what Apple has actually shipped did work, the reaction would be “why is this?” rather than the “it just works” magic that made the iPhone, AirPods, or Apple Pay category-defining. The major risk for Apple is not that it’s playing the wrong game, but that it is not playing hard enough. If Cupertino wants to cash in on its favorable-odds bets, it needs to kick its AI execution up not one but two gears, and fast. Otherwise, all the strategic discipline in the world will amount to little more than a footnote in a case study on missed inflection points.

Back when I was a wee’un, Michael Spence said from the front of the classroom: “If this were a real business school rather than a liberal arts class, we would now spend twice as much time on how a corporation would proceed to structure itself to execute this corporate strategy as we have on how a corporation should formulate it, but we are on the north side of the river, and we need to move this course on…” Smart words. Very smart man.

The very sharp Ben Thompson (except for his beliefs that advertising-supported business models are good things in the Attention Info-Bio Tech Economy, and that near-monopolies established by winning the game of advancing infotech should then be allowed to flourish forever, largely free of governments cutting them back) reviews Apple Computer’s “AI” strategy. He says smart things:

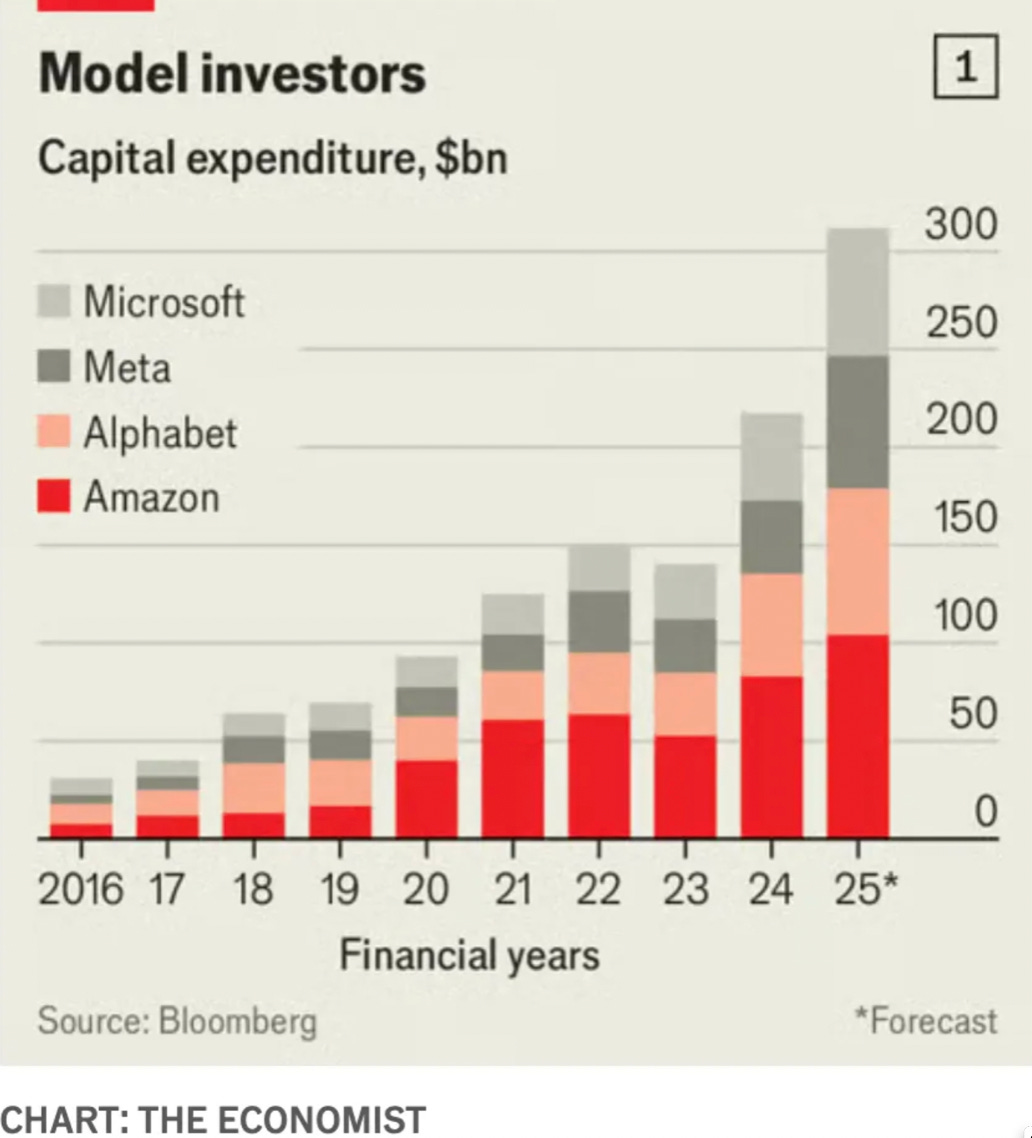

Ben Thompson: Cook’s AI Comments; Apple’s AI Strategy <https://stratechery.com/2025/apple-earnings-cooks-ai-comments-apples-ai-strategy-redux/>: ‘The first takeaway is how relatively tiny that [Apple total capital expenditure] number is compared to Apple’s Big Tech peers; $4 billion annualized CapEx is less than half of how much Google increased their CapEx on their earnings call for this fiscal year…. What is the roadmap?… From Cook’s opening remarks… “We see AI as one of the most profound technologies of our lifetime…. Apple has always been about taking the most advanced technologies and making them easy to use and accessible for everyone…. We’re integrating AI… in a way that is deeply personal, private, and seamless, right where users need them. We’ve already released more than 20 Apple Intelligence features, including visual intelligence, cleanup, and powerful Writing Tools. We’re making good progress on a more personalized Siri…. Apple Silicon is at the heart… [of] powerful Apple Intelligence features… run[ning] directly on device…. Our servers… deliver even greater capabilities while preserving user privacy through our Private Cloud Compute architecture. We believe our platforms offer the best way for users to experience the full potential of generative AI… right on their Mac, iPad, and iPhone…”.

Cases and content that Apple is uniquely positioned to provide and access… is not any sort of general purpose chatbot…. [but] focused on specific use cases… [in a] problem space that… is constrained and grounded… where it is much less likely that the AI screws up… a space… useful, that only they can address, and… “safe” in terms of reputation risk. Honestly, it almost seems unfair — or, to put it another way, it speaks to what a massive advantage there is for a trusted platform. Apple gets to solve real problems in meaningful ways with low risk, and that’s exactly what they are doing…’

I think this gets it right.

- Apple avoids paying the NVIDIA tax…
- Apple also avoids paying inference-cloud bills to a large degree by putting a large share of Apple Intelligence on-device…
- Apple doesn’t have to spend money competing in the ChatBot market…
- And Apple makes two favorable-odds bets:
    - One bet is that on-device (with occasional reaching out to Apple’s private cloud), low-latency, privacy-secured actual doing of useful tasks will be something very valuable, and something where Apple can maintain at least parity with Android…
    - A second bet is that the other, “ChatBot”, side will be one where the fact that people are using OpenAI’s or Google’s or FaceBook’s or Anthropic’s ChatBot (because Apple does not have one) will not really matter. As Thompson says lower down, “we will still need devices to access AI, and Apple is best at devices…”

The so-called “NVIDIA tax” (the premium all other platform oligopolies are now paying, and will continue to pay, as NVIDIA exercises its pricing power in a moment of panicked, must-have-now AI infrastructure demand) is, right now, a huge deal that Apple very much wants to avoid paying. The scale is enormous: NVIDIA’s gross margins have soared to the 70–75% range, a figure that would make even the most rapacious of monopolists blush. The A100 and H100 GPU chips, the backbone of large-scale AI inference and training, are selling for $25,000 to $40,000 apiece, and sometimes more in spot markets, with actual production costs a tiny fraction of that sum, even though NVIDIA has to rely on TSMC, which in turn has to rely on ASML, as essential sole suppliers. The result? Even the largest hyperscalers (Microsoft, Google, Amazon, Meta) are funnelling billions and tens of billions a year into NVIDIA’s coffers, both because Jensen Huang’s team has invented a better mousetrap, and because it has, by force of early vision and relentless execution, created a de facto standard and an ecosystem (CUDA) that locks in demand and raises switching costs to vertiginous heights.

The magnitude: NVIDIA’s data center revenue for the most recent fiscal year exceeded $50 billion, up from $15 billion just two years prior, a more-than-threefold increase almost entirely attributable to AI demand. It is not as though TSMC has tripled its fab throughput for NVIDIA’s chips, after all. The company’s market capitalization now rivals that of the oil majors at the peak of their powers. For every dollar spent on cloud AI compute, a substantial slice (estimates range from 20% to 40%) is captured as economic rent by NVIDIA, rather than being competed away or reinvested in broader innovation. Why? Because Google, Microsoft, and Meta believe they must burn tens of billions a year on cloud-based AI.
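To make the arithmetic in these figures concrete, here is a minimal back-of-envelope sketch in Python. The inputs are only the round numbers quoted above ($15 billion to $50 billion over two years; 70–75% gross margins; a 20–40% rent share). The way the rent share is decomposed, as (share of each cloud-AI-compute dollar that goes to NVIDIA hardware) × (NVIDIA’s gross margin), is an illustrative assumption rather than a sourced estimate, and the function names are hypothetical.

```python
# Back-of-envelope arithmetic on the NVIDIA figures quoted in the text.
# Inputs are the text's round numbers. The rent model in
# implied_hardware_share() is an illustrative assumption, not a sourced estimate.


def growth_multiple(start: float, end: float) -> float:
    """Ratio of ending to starting revenue."""
    if start <= 0:
        raise ValueError("start must be positive")
    return end / start


def annualized_growth(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by going from start to end over `years`."""
    if years <= 0:
        raise ValueError("years must be positive")
    return growth_multiple(start, end) ** (1.0 / years) - 1.0


def implied_hardware_share(rent_share: float, gross_margin: float) -> float:
    """Hypothetical model: rent_share = hardware_share * gross_margin.

    Solves for hardware_share, the fraction of each cloud-AI-compute dollar
    that would have to go to NVIDIA hardware for the quoted rent share to hold.
    """
    if not 0 < gross_margin <= 1:
        raise ValueError("gross_margin must be in (0, 1]")
    return rent_share / gross_margin


if __name__ == "__main__":
    start, end = 15.0, 50.0  # data center revenue, $ billions, two fiscal years apart
    print(f"growth multiple: {growth_multiple(start, end):.2f}x")
    print(f"annualized growth: {annualized_growth(start, end, 2):.0%}")
    for rent in (0.20, 0.40):  # quoted range for NVIDIA's rent per compute dollar
        for margin in (0.70, 0.75):  # quoted gross-margin range
            share = implied_hardware_share(rent, margin)
            print(f"rent {rent:.0%}, margin {margin:.0%} -> hardware share {share:.0%}")
```

Run as written, it reports a multiple of about 3.3x (roughly 83% a year, compounded) and implied hardware shares of about 27% to 57%. Treating NVIDIA’s entire gross margin as rent overstates the rent per hardware dollar, since gross margin must also cover R&D, so under this toy model those shares are, if anything, lower bounds.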

Apple, by contrast, has—so far—largely avoided this tax. By designing its own silicon (the M-series chips) and focusing on on-device inference, Apple sidesteps the need to rent vast fleets of NVIDIA-powered servers for AI workloads. Instead, it invests in its own private cloud infrastructure, powered by Apple Silicon, and keeps its capital expenditures at a fraction of the scale now required of its peers. This is not a detail. It is instead a huge bet that the locus of value in AI will, for its customers, remain at the on-device edge—not in the cloud.

The broader implication is clear: as long as the AI gold rush continues, and as long as CUDA remains the lingua franca of machine learning, NVIDIA’s pricing power will persist, and the tax will be levied on all who lack the will or the capability to defect. Apple’s escape from this regime is, I think, both remarkable and fragile. Should the center of gravity in AI shift decisively back to the cloud, or should NVIDIA’s lock on the ecosystem weaken, the calculus may change. But for now, the NVIDIA tax stands as a monument to the power of technological path dependence—and to the rewards of vertical integration, if one can afford it. And to the astonishing success of Apple Silicon as hardware.

Apple also avoids paying inference-cloud bills to a large degree by putting a large share of Apple Intelligence on-device, a strategic decision with both technical and economic resonance. Google, Microsoft, Meta, and Amazon have all built their AI offerings around cloud-based inference, which requires massive fleets of NVIDIA GPUs (even for Google, despite its own TPUs) humming away in data centers, racking up not only hardware costs but also ongoing operational expenses for power, cooling, and bandwidth. These costs balloon into the billions of dollars annually, and, given competition, there is no clear road to covering even their marginal costs.

Apple, by contrast, leverages its vertical integration and prowess in custom silicon to run as much AI as possible directly on devices (iPhones, iPads, Macs) using its A-series and M-series chips, with the marginal cost of inference borne by the user’s own device, showing up invisibly in the monthly electric bill rather than on Apple’s books. By architecting Apple Intelligence to work “on the edge,” Apple sidesteps the recurring cloud inference bills. This, too, is a favorable-odds bet: that the future of AI for most consumers will, for both privacy and cost reasons, live primarily on their own devices.

Plus Apple doesn’t have to spend money competing in the ChatBot market, which feels to me like a repeat of the video-streaming wars. In those, every large media company took a huge amount of money and set it on fire, with YouTube and Netflix being the last ones standing and the only ones, I think, with the potential to be profitable. Perhaps this is a windfall benefit from strategic discipline.

Perhaps this is luck.

In any event, there is a revealing contrast with its Big Tech peers. Consider the arms race underway among Google, Microsoft, FaceBook, Amazon, OpenAI, and more: each is pouring billions and tens of billions into developing, training, and marketing ever-larger, ever-more-general-purpose ChatBots, all in the hope of capturing the next great platform shift in human-computer interaction. These efforts are not cheap. Training a state-of-the-art large language model (LLM) can cost hundreds of millions of dollars in compute alone, and that’s before you factor in the ongoing costs of inference, the armies of prompt engineers, the content moderation teams, and the relentless marketing spend to convince the world that your chatbot is the one to use.

Apple, by contrast, has chosen to sit this particular race out, while focusing its own engineering resources on tightly-scoped, privacy-preserving, on-device AI features that enhance the user experience without the reputational and financial risks of chatbot hallucinations.

This is a bet that the real value for Apple’s customers lies in seamless, reliable, and private AI augmentation, not in chasing the latest ChatBot hype cycle, in a world where the chatbot market is still searching for a sustainable business model.

Apple’s refusal to play is a bet, but at least for now it looks to me like a not-unshrewd bet.

There are really two bets. And I would not say right now that either is at unfavorable odds for Apple.

The first is that on-device, low-latency, privacy-secured doing of useful tasks (think: transcribing voice memos, classifying photos, summarizing messages, or even generating suggested replies) will turn out to be not just useful, but a key source of phone platform value. Gaining an edge here over Android, because Google is chasing ChatBot ASI, might well be a key differentiator. Maintaining parity with Android here would allow other differentiators to shine through. Here Apple is betting on its own silicon, its control of the hardware-software stack, and its reputation for privacy.

The iPhone, iPad, and Mac, running Apple’s M-series and A-series chips, are already capable of impressive feats of on-device inference, and the company is banking that users will prefer AI features that don’t require their data to be shipped off to some distant data center—especially in a world where privacy regulation and reputational risk are rising.

The second bet is that the “ChatBot” side of the AI market, where users interact with OpenAI’s GPT, Google’s Gemini, Meta’s Llama, or Anthropic’s Claude, will turn out to be a space where Apple’s absence will not matter much at all. Apple’s wager is that, as Ben Thompson puts it, “we will still need devices to access AI, and Apple is best at devices.” So long as the device remains the locus of user attention and agency, Apple can afford not to play, just as it decided not to play vis-à-vis Google search. Focusing its own resources on making the device experience seamless, private, and delightful might well be a better path than building for ChatBots what Safari is to Chrome, or what Apple Maps is to Google Maps.

Let others burn capital chasing the next shiny object, and then, if and when it matters, swoop in and integrate the best.

Apple’s AI playbook is refreshingly contrarian: say no to the chatbot arms race, double down on silicon, and let others light money on fire in the cloud. The “NVIDIA tax” is for other companies; Apple’s bet is that users want fast, private, on-device intelligence, not cloud-powered hallucinations.

But the problem isn’t the vision—it’s the follow-through. Apple needs to stop treating AI as a side project and start executing.

If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…