Image Source: Dex Horthy on Twitter.

As generative AI moves from experimentation to enterprise-scale deployment, a quiet revolution is reshaping how we build and optimize intelligent systems.

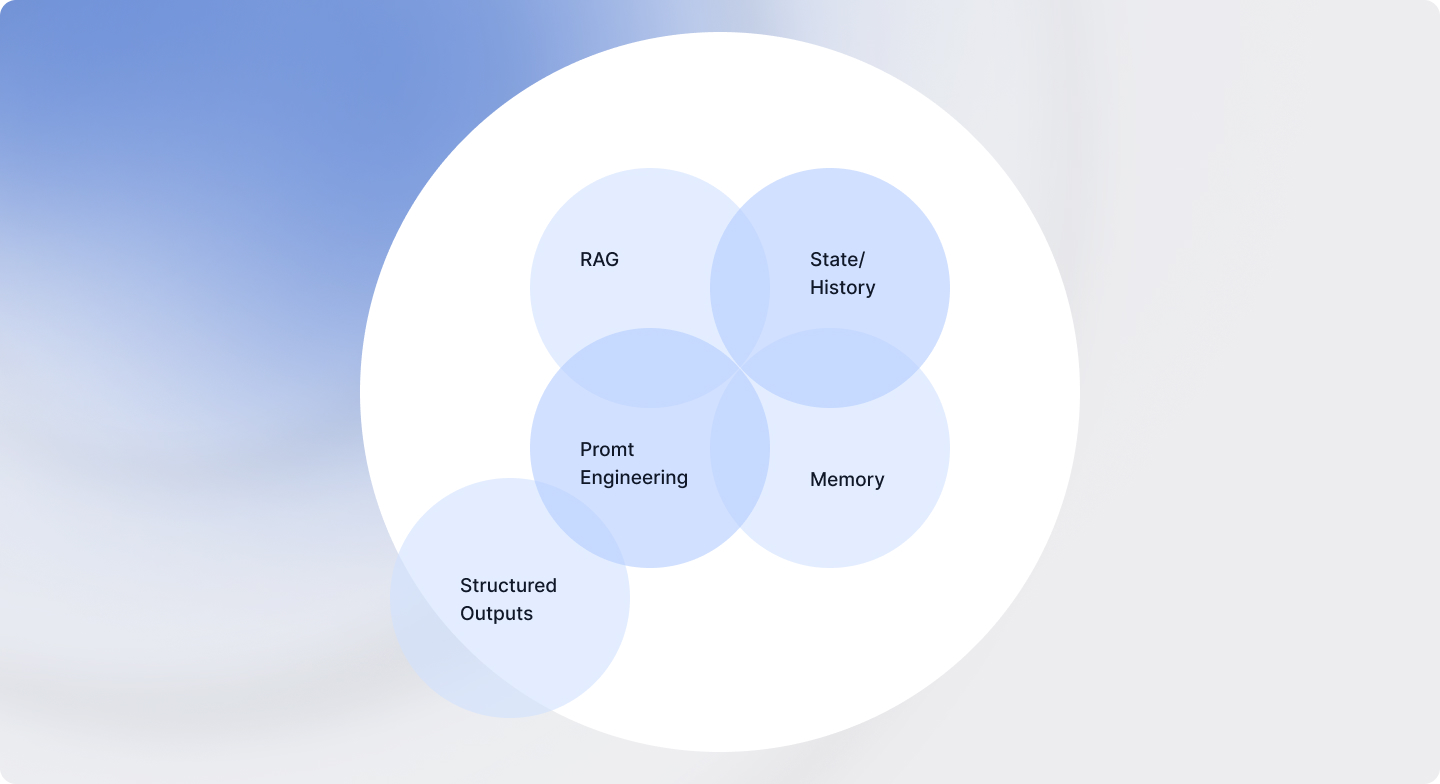

Until recently, much of the focus was on prompt engineering: carefully crafting inputs to coax the right responses out of large language models. This approach has powered clever chatbots and impressive prototypes. But in practice, it's fragile. Prompts are sensitive to exact phrasing, blind to past interactions, and ill-equipped to manage complexity over time.

A new paradigm is emerging: context engineering, sometimes called contextual AI.

Rather than tuning the input, context engineering focuses on shaping the environment in which AI operates—defining the memory, access to knowledge, role-based understanding, and business rules that guide behavior. It’s what allows AI to move beyond isolated tasks and become a reasoning participant in enterprise workflows.

This marks a critical shift in AI design: from optimizing individual exchanges to engineering systems that think, adapt, and evolve.

Prompt Engineering Versus Context Engineering in AI

From Isolated Inputs to Intelligent Ecosystems

To understand the significance of this evolution, it helps to zoom out.

Prompt engineering is inherently transactional. You craft a precise question, the model returns an answer, and the loop resets. While effective for single-turn tasks, this structure breaks down in real-world scenarios where context matters: customer service interactions that span multiple channels, employee workflows that depend on enterprise systems, or AI agents collaborating across roles.

Context engineering shifts us toward systems thinking.

Instead of optimizing a single prompt, we optimize the contextual framework—the user history, session data, domain knowledge, security controls, and intent signals that shape how an AI interprets each request. This enables more natural, fluid, and resilient AI behavior across multi-step journeys and dynamic conditions.

For example, imagine two employees asking the same AI agent about Q2 sales performance. With prompt engineering, both get the same static answer. With context engineering, the system knows one user is a regional sales lead and the other is a finance analyst, and it tailors each response to that person's role, permissions, prior interactions, and relevant KPIs.
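To make the Q2 example concrete, here is a minimal Python sketch of how a system might assemble different context for the same question. The `UserProfile` type, the `ROLE_KPIS` table, and the context format are illustrative assumptions, not part of any specific platform; a real system would pull roles and permissions from an identity provider and KPIs from governed data sources.

```python
from dataclasses import dataclass, field

@dataclass
class UserProfile:
    """Hypothetical user record; a real system would load this from an identity provider."""
    user_id: str
    role: str
    permissions: set[str]
    prior_topics: list[str] = field(default_factory=list)

# Hypothetical mapping from role to the KPIs that role typically cares about.
ROLE_KPIS = {
    "regional_sales_lead": ["bookings by territory", "pipeline coverage"],
    "finance_analyst": ["recognized revenue", "gross margin"],
}

def build_context(user: UserProfile, question: str) -> str:
    """Assemble the context an LLM would receive alongside the user's question."""
    kpis = ROLE_KPIS.get(user.role, [])
    lines = [
        f"User role: {user.role}",
        f"Permitted data scopes: {', '.join(sorted(user.permissions))}",
        f"Relevant KPIs: {', '.join(kpis) or 'none configured'}",
    ]
    # Include only the last few topics so the context stays short.
    if user.prior_topics:
        lines.append(f"Recent topics: {'; '.join(user.prior_topics[-3:])}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)

sales_lead = UserProfile("u1", "regional_sales_lead", {"sales:emea"})
analyst = UserProfile("u2", "finance_analyst", {"finance:all"}, ["Q1 close"])
question = "How did Q2 sales perform?"
print(build_context(sales_lead, question))
print()
print(build_context(analyst, question))
```

Both users ask the identical question, but the model receives different framing. That is the core idea: the question stays fixed while the surrounding context changes.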

This is the foundation for truly intelligent AI systems—ones that not only generate answers, but understand the question in context.

Scope and Focus

Prompt engineering is inherently narrow. It focuses on crafting the perfect input to guide a model's response in a single interaction. Tools like Prompt Studio can accelerate prompt experimentation, but the core drawback remains: the model has no memory or broader understanding beyond the prompt itself.

Context engineering takes a wider view. It shifts attention from the individual input-output loop to the surrounding ecosystem: who the user is, what systems and data are relevant, what’s already been said or done, and what business rules should apply. Rather than optimizing a single response, it shapes the AI’s understanding across time and use cases.

This expanded scope transforms AI from a reactive tool into an informed participant, one that can reason over history, adjust to different roles, and act with consistency. It's not just about better answers; it's about using persistent agent memory to build systems that align with how people and organizations actually operate.

Handling Complexity

Real-world use cases don’t fit neatly into static interactions. They involve ambiguity, long histories, shifting priorities, and organizational nuance.

Prompt engineering simply isn’t built for that. It requires constant manual tuning and offers no mechanism for continuity. Context engineering addresses this gap by enabling AI to operate across time, channels, and teams—with a persistent understanding of both data and intent.

For enterprise applications, this is essential. Whether managing a customer issue, orchestrating a multi-system workflow, or enforcing compliance in decision-making, AI must interpret not just what was asked—but why, by whom, and under what constraints. That demands memory, rules, reasoning, and orchestration—all of which context engineering makes possible.

Contextual AI Adaptability and Scalability

As organizations shift from experimenting with GenAI to operationalizing AI agents within business processes, the need for adaptable, context-aware systems becomes clear. Prompt engineering alone does not scale. It’s a manual effort that assumes a static context and requires human intervention each time the scenario changes.

Context engineering, by contrast, introduces a more dynamic and sustainable approach. It enables AI systems to reason over structured and unstructured data, understand relationships between concepts, track interaction history, and even modify behavior based on evolving business objectives.

This shift also aligns with the broader movement toward agentic AI—systems that can plan, coordinate, and execute tasks autonomously. In this model, AI agents don’t just answer questions; they make decisions, trigger actions, and collaborate with other agents or systems. But this kind of intelligence only works if the agents are context-aware: if they know what happened before, what constraints apply now, and what outcomes are desired next.

Applying Context Engineering in Practice

Bringing context-aware AI to life inside an enterprise isn't as simple as flipping a switch. It takes a deliberate shift in how AI systems are designed and deployed. At its core, that means building agents that don't just react, but understand: they maintain continuity across sessions, track prior interactions, and respond to dynamic user needs in real time. Intelligence alone isn't enough; these agents also need memory, adaptability, and structure.
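Continuity across sessions ultimately comes down to storing interaction history somewhere durable and reloading it when the user returns. The sketch below assumes a single JSON file as the store and a fixed cap on remembered turns; `ConversationMemory` is a hypothetical name, and a production system would more likely use a database plus summarization and retention policies.

```python
import json
from pathlib import Path

class ConversationMemory:
    """Hypothetical per-user memory that survives across sessions via a JSON file."""

    def __init__(self, path: Path, max_turns: int = 20):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.path = path
        self.max_turns = max_turns
        # Reload whatever earlier sessions left behind, if anything.
        self._data: dict[str, list[dict]] = (
            json.loads(self.path.read_text()) if self.path.exists() else {}
        )

    def record(self, user_id: str, speaker: str, text: str) -> None:
        """Append one turn and persist immediately so a crash loses nothing."""
        turns = self._data.setdefault(user_id, [])
        turns.append({"speaker": speaker, "text": text})
        # Drop the oldest turns so the remembered context stays bounded.
        del turns[:-self.max_turns]
        self.path.write_text(json.dumps(self._data))

    def recall(self, user_id: str) -> list[dict]:
        """Return a copy of the stored turns for this user (empty if unknown)."""
        return list(self._data.get(user_id, []))
```

Creating a second `ConversationMemory` on the same path simulates a new session: `recall` returns the turns recorded earlier, which is what lets an agent pick up where the last conversation left off.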

Imagine a customer service agent that not only answers queries but also recalls the user’s past issues, preferences, and even unresolved frustrations. It personalizes responses not because it was explicitly told to, but because it has context embedded in its design. Or consider an insurance claims workflow that adjusts based on who the customer is, what type of policy they hold, and their historical risk profile—automatically altering the process in real time without human reprogramming. In sales, an intelligent assistant can tap into CRM records, ERP data, and product documentation to assemble answers tailored to the specific deal, the person on the other end, and the nuances of the ongoing conversation.
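The claims example can be sketched as context-driven routing: the same entry point yields different processing steps depending on policy type, risk profile, and customer tier. The thresholds, step names, and `ClaimContext` fields below are hypothetical placeholders, not real underwriting rules.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ClaimContext:
    policy_type: str           # e.g. "auto" or "home"
    risk_score: float          # 0.0 (low) to 1.0 (high), from a hypothetical risk model
    claim_amount: float
    is_premium_customer: bool

def route_claim(ctx: ClaimContext) -> list[str]:
    """Choose the processing steps for a claim from its context (illustrative rules only)."""
    steps = ["verify_policy"]
    if ctx.risk_score >= 0.7 or ctx.claim_amount > 50_000:
        steps.append("manual_review")        # high risk or large amount: a human looks first
    elif ctx.policy_type == "auto" and ctx.claim_amount <= 5_000:
        steps.append("auto_approve")         # small, low-risk auto claims skip assessment
    else:
        steps.append("adjuster_assessment")
    if ctx.is_premium_customer:
        steps.append("assign_dedicated_handler")
    steps.append("notify_customer")
    return steps

print(route_claim(ClaimContext("auto", 0.2, 1_200, False)))
# ['verify_policy', 'auto_approve', 'notify_customer']
print(route_claim(ClaimContext("home", 0.9, 3_000, True)))
# ['verify_policy', 'manual_review', 'assign_dedicated_handler', 'notify_customer']
```

Because the rules read from context rather than being hard-coded per customer, changing a threshold changes the workflow for every matching claim without reprogramming the process itself.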

These aren’t theoretical use cases—they’re examples of what becomes possible when context is treated as a first-class engineering concern. The intelligence lies not just in the model’s ability to generate text, but in the system’s ability to remember, reason, and adjust.

Overcoming Common Context Engineering Challenges

With this shift comes a new set of engineering challenges—ones that differ fundamentally from those faced in traditional AI deployments.

One of the most critical hurdles is persistent memory. AI agents must not only remember what’s happened in the past, but also explain why they made the decisions they did. This becomes essential in industries where auditability, compliance, and trust are non-negotiable. Without traceability, intelligent systems quickly become unmanageable and opaque.
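One common way to make agent decisions traceable is an append-only log that records each decision with its rationale and the data it relied on, chaining entries by hash so later edits are detectable. This is a generic technique, not a description of any particular product; `DecisionLog` and its fields are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    agent: str
    decision: str
    rationale: str                 # why the agent chose this outcome
    context_refs: tuple[str, ...]  # IDs of the data the agent relied on
    timestamp: str
    prev_hash: str                 # hash of the previous entry ("genesis" for the first)

class DecisionLog:
    """Append-only decision log. Each entry stores the previous entry's hash,
    so editing an earlier record after the fact makes verify() fail."""

    def __init__(self) -> None:
        self._entries: list[tuple[DecisionRecord, str]] = []

    @staticmethod
    def _hash(record: DecisionRecord) -> str:
        payload = json.dumps(asdict(record), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, decision_id: str, agent: str, decision: str,
               rationale: str, context_refs: list[str]) -> DecisionRecord:
        prev_hash = self._entries[-1][1] if self._entries else "genesis"
        record = DecisionRecord(
            decision_id, agent, decision, rationale, tuple(context_refs),
            datetime.now(timezone.utc).isoformat(), prev_hash,
        )
        self._entries.append((record, self._hash(record)))
        return record

    def explain(self, decision_id: str) -> Optional[DecisionRecord]:
        """Return the stored record (including rationale) for a decision, if any."""
        for record, _ in self._entries:
            if record.decision_id == decision_id:
                return record
        return None

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to the one before it."""
        prev_hash = "genesis"
        for record, stored_hash in self._entries:
            if record.prev_hash != prev_hash or self._hash(record) != stored_hash:
                return False
            prev_hash = stored_hash
        return True
```

Note that a hash chain only detects tampering if the latest hash is also kept somewhere the editor cannot rewrite, such as a separate audit store.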

Data fragmentation is another significant barrier. In most enterprises, context lives in dozens of different systems, formats, and silos. Making that context available to AI agents means solving for more than just data access—it means designing for integration, security, and semantic consistency at scale.
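In practice, much of this integration work is mapping each source system's fields onto one shared schema so agents see consistent meanings. The sketch assumes two hypothetical sources, a CRM and an ERP, with made-up field names and an ERP that stores amounts in cents.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CustomerContext:
    """Shared schema the agent sees, regardless of which system supplied each field."""
    customer_id: str
    name: str
    open_orders: int
    lifetime_value_usd: float

# Hypothetical adapters: each source system names and formats fields differently.
def from_crm(record: dict) -> dict:
    return {"customer_id": record["AccountId"], "name": record["AccountName"]}

def from_erp(record: dict) -> dict:
    return {
        "customer_id": record["cust_no"],
        "open_orders": int(record["open_order_count"]),  # ERP exports counts as strings
        "lifetime_value_usd": record["ltv_cents"] / 100,  # normalize cents to dollars
    }

def merge_context(crm_record: dict, erp_record: dict) -> CustomerContext:
    """Combine both sources into one record, refusing to mix different customers."""
    crm, erp = from_crm(crm_record), from_erp(erp_record)
    if crm["customer_id"] != erp["customer_id"]:
        raise ValueError("records refer to different customers")
    return CustomerContext(**{**crm, **erp})
```

Keeping the mapping in small per-source adapters means adding a new system touches one function rather than every agent that consumes customer context.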

Scalability presents its own challenge. The needs of a customer service rep in North America might differ greatly from those of a rep in Southeast Asia. Regulatory contexts, language nuances, and product differences must all be accounted for. Context engineering is what allows systems to adapt without being rebuilt for every variation.
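One way to adapt without rebuilding is a shared base configuration with small per-region overlays merged on top. The keys and values below, including the retention periods and escalation channels, are hypothetical examples rather than actual regional requirements.

```python
from copy import deepcopy

# Hypothetical defaults shared by every region.
BASE_CONFIG = {
    "supported_languages": ["en", "es", "fr"],
    "data_retention_days": 365,
    "escalation": {"channel": "email", "sla_hours": 24},
}

# Hypothetical per-region overlays: each states only what differs from the base.
REGION_OVERRIDES = {
    "north_america": {},
    "southeast_asia": {
        "supported_languages": ["en", "id", "th", "vi"],
        "data_retention_days": 180,
        "escalation": {"channel": "chat"},
    },
}

def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict: nested dicts merge key by key, other values are replaced."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged

def config_for(region: str) -> dict:
    """Unknown regions fall back to the base configuration."""
    return deep_merge(BASE_CONFIG, REGION_OVERRIDES.get(region, {}))
```

Because lists are replaced rather than concatenated, a region can narrow its language list as easily as extend it, and the base stays untouched by any regional merge.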

And of course, there's governance. As agents become more autonomous and capable, enterprises need mechanisms to ensure they operate within boundaries. Guardrails must be in place not only to reduce hallucinations, but also to enforce business rules, protect sensitive data, and align with organizational policy.
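Guardrails are often implemented as checks that run on a draft response before it reaches the user. This minimal sketch assumes a single hypothetical business rule (a 15% discount cap) and uses simple regular expressions to redact email addresses and card-like numbers; real deployments typically rely on dedicated PII-detection and policy engines, since patterns like these miss many cases.

```python
import re
from dataclasses import dataclass, field

# Deliberately simple patterns for illustration; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional space/dash separators

MAX_DISCOUNT_PCT = 15  # hypothetical business rule

@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    violations: list[str] = field(default_factory=list)

def apply_guardrails(draft: str, offered_discount_pct: float) -> GuardrailResult:
    """Check a draft reply against a business rule, then redact sensitive data."""
    violations = []
    if offered_discount_pct > MAX_DISCOUNT_PCT:
        violations.append(
            f"discount {offered_discount_pct}% exceeds {MAX_DISCOUNT_PCT}% limit"
        )
    text = EMAIL.sub("[REDACTED EMAIL]", draft)
    text = CARD.sub("[REDACTED CARD]", text)
    return GuardrailResult(allowed=not violations, text=text, violations=violations)
```

Redaction always runs, while rule violations mark the draft as blocked, so a caller can route blocked drafts to a human instead of sending them.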

None of this is trivial, but it is possible. The key is a platform architecture that treats context not as an add-on but as the foundation, with traceability, integration, adaptability, and governance as first principles. With that foundation in place, context engineering becomes not only achievable but indispensable for any enterprise looking to operationalize AI responsibly at scale.

Why Context Engineering Matters Now

The rise of context engineering signals a maturation in AI development. As we move beyond basic prompt optimization, we’re empowering AI to operate more like human thinkers—drawing on accumulated knowledge, adapting to new information, and collaborating effectively.

This is particularly vital in fields like customer service, where Kore.ai's context-aware bots can maintain conversation history and personalize responses, which can improve both customer satisfaction and efficiency.

In summary, while prompt engineering laid the groundwork, context engineering builds the full structure. It’s not just about better questions; it’s about creating smarter ecosystems.

For AI practitioners, embracing context engineering for agents means designing systems that are resilient, intelligent, and ready for tomorrow's complex, evolving landscape. If you're exploring agentic AI solutions, consider how context engineering can elevate your projects, starting with innovative platforms like the Kore.ai Agent Platform.