Can an open source MoE truly power agentic coding workflows at a fraction of flagship model costs while sustaining long-horizon tool use across MCP, shell, browser, retrieval, and code? The MiniMax team has just released MiniMax-M2, a mixture-of-experts (MoE) model optimized for coding and agent workflows. The weights are published on Hugging Face under the MIT license, and the model is positioned for end-to-end tool use, multi-file editing, and long-horizon plans. It lists 229B total parameters with about 10B active per token, which keeps memory and latency in check during agent loops.

Architecture and why activation size matters

MiniMax-M2 is a compact MoE that routes to about 10B active parameters per token. The smaller activations reduce memory pressure and tail latency in plan, act, and verify loops, and allow more concurrent runs in CI, browse, and retrieval chains. This is the performance budget that enables the speed and cost claims relative to dense models of similar quality.
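To make the activation budget concrete, here is a back-of-the-envelope sketch in Python. It uses only the 229B total and roughly 10B active figures quoted above. The "2 FLOPs per active parameter per token" figure is a common rule of thumb for a forward pass, not a number from MiniMax, and the bytes-per-parameter values are simply the standard widths of F32, BF16, and FP8. The function names are hypothetical.

```python
# Rough arithmetic only; figures come from the article, helpers are illustrative.
TOTAL_PARAMS = 229e9   # total parameters (model card metadata)
ACTIVE_PARAMS = 10e9   # approximate active parameters per token

def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active / total

def forward_flops_per_token(active: float) -> float:
    """Rule-of-thumb estimate: about 2 FLOPs per active parameter per token."""
    return 2.0 * active

def weight_gigabytes(params: float, bytes_per_param: int) -> float:
    """Storage for the full weight set at a given precision, in GB (1e9 bytes)."""
    return params * bytes_per_param / 1e9

frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
print(f"active fraction: {frac:.1%}")  # active fraction: 4.4%

moe_flops = forward_flops_per_token(ACTIVE_PARAMS)
dense_flops = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense model of equal size
print(f"per-token compute vs. same-size dense model: {dense_flops / moe_flops:.1f}x lower")

# All experts still have to be stored, so weight storage follows the total count.
for name, width in {"F32": 4, "BF16": 2, "FP8": 1}.items():
    print(f"{name}: {weight_gigabytes(TOTAL_PARAMS, width):.0f} GB of weights")
```

Under these assumptions, the saving shows up in per-token compute and weight reads, while the storage footprint still tracks the total parameter count.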

MiniMax-M2 is an interleaved thinking model. The research team wrapped internal reasoning in

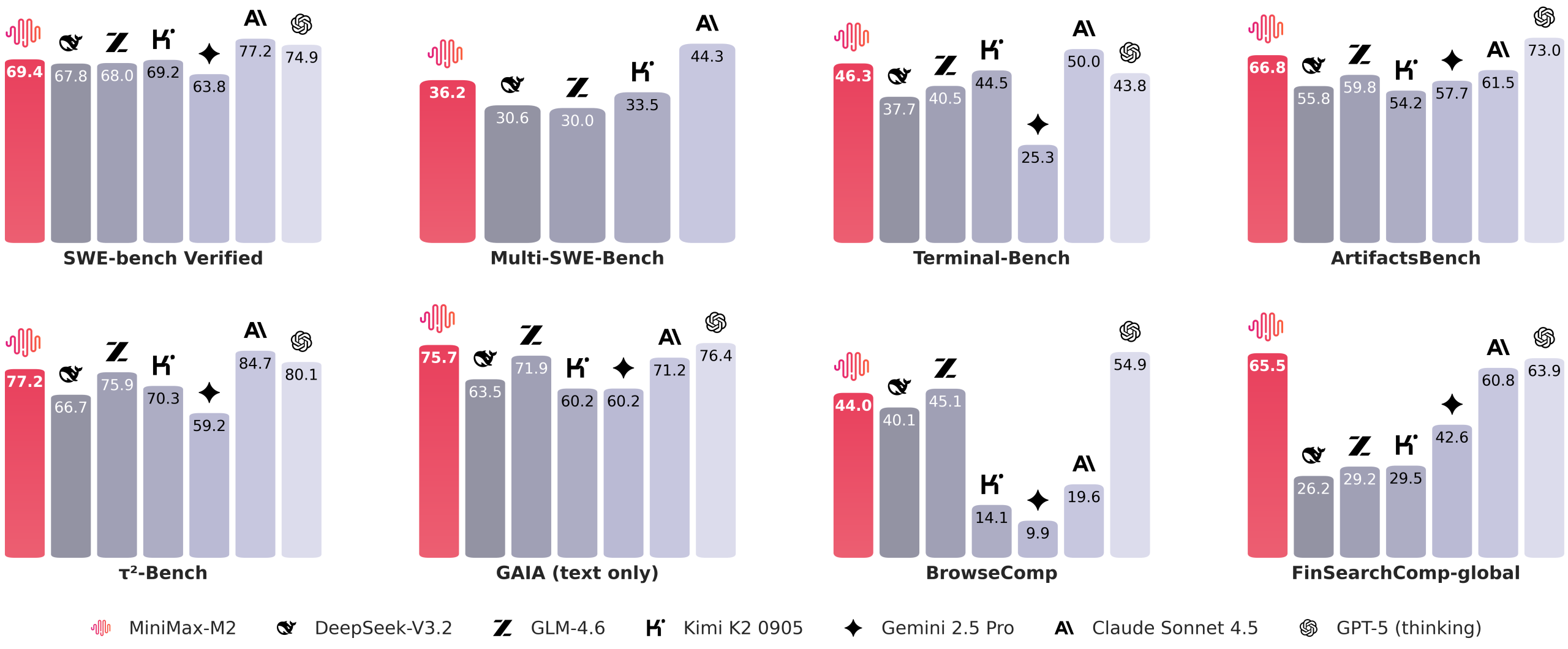

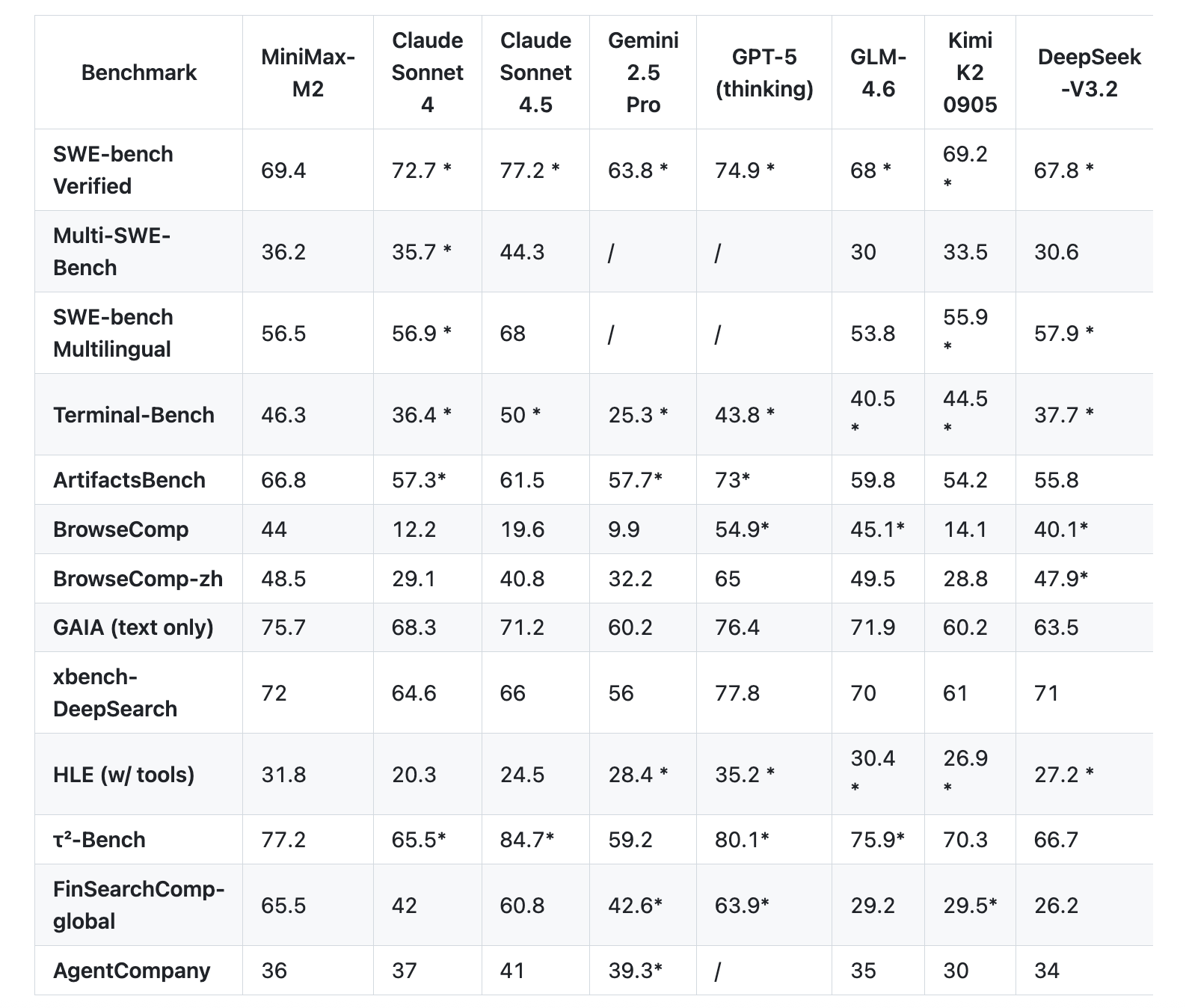

Benchmarks that target coding and agents

The MiniMax team reports a set of agent and code evaluations that are closer to developer workflows than static QA. On Terminal-Bench, the table shows 46.3. On Multi-SWE-Bench, it shows 36.2. On BrowseComp, it shows 44.0. SWE-bench Verified is listed at 69.4, with the scaffold detail given as OpenHands with 128k context and 100 steps.
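The 100-step cap in the SWE-bench Verified scaffold can be pictured as a step budget on a plan, act, verify loop. The sketch below is a generic illustration in Python, not the OpenHands implementation: `run_agent_loop` and the three toy callbacks are hypothetical names, and a real scaffold would call the model and real tools where the stubs are.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, str]  # (action, observation)

def run_agent_loop(
    plan: Callable[[List[Step]], str],
    act: Callable[[str], str],
    verify: Callable[[List[Step]], bool],
    max_steps: int = 100,  # step budget, mirroring the 100-step cap in the text
) -> Tuple[bool, int]:
    """Run plan -> act -> verify until verified or the step budget is spent."""
    history: List[Step] = []
    for step in range(1, max_steps + 1):
        action = plan(history)             # decide the next action from history
        observation = act(action)          # execute it (shell, browser, editor, ...)
        history.append((action, observation))
        if verify(history):                # stop as soon as the goal is confirmed
            return True, step
    return False, max_steps                # budget exhausted without success

# Toy stand-ins so the sketch runs without a model or tools.
def toy_plan(history: List[Step]) -> str:
    return f"edit-{len(history) + 1}"

def toy_act(action: str) -> str:
    return "tests pass" if action == "edit-3" else "tests fail"

def toy_verify(history: List[Step]) -> bool:
    return history[-1][1] == "tests pass"

solved, steps_used = run_agent_loop(toy_plan, toy_act, toy_verify)
print(solved, steps_used)  # True 3
```

Reported scores depend on this kind of budget, which is why the scaffold, context length, and step cap are listed next to the number.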

MiniMax's official announcement stresses pricing at 8% of Claude Sonnet's and nearly 2x the speed, plus a free access window. The same note provides the specific token prices and the trial deadline.

Comparison: M1 vs M2

| Aspect | MiniMax M1 | MiniMax M2 |
|---|---|---|
| Total parameters | 456B total | 229B in model card metadata, model card text says 230B total |
| Active parameters per token | 45.9B active | 10B active |
| Core design | Hybrid Mixture of Experts with Lightning Attention | Sparse Mixture of Experts targeting coding and agent workflows |
| Thinking format | Thinking budget variants 40k and 80k in RL training, no think tag protocol required | Interleaved thinking with |
| Benchmarks highlighted | AIME, LiveCodeBench, SWE-bench Verified, TAU-bench, long context MRCR, MMLU-Pro | Terminal-Bench, Multi SWE-Bench, SWE-bench Verified, BrowseComp, GAIA text only, Artificial Analysis intelligence suite |
| Inference defaults | temperature 1.0, top p 0.95 | model card shows temperature 1.0, top p 0.95, top k 40, launch page shows top k 20 |
| Serving guidance | vLLM recommended, Transformers path also documented | vLLM and SGLang recommended, tool calling guide provided |
| Primary focus | Long context reasoning, efficient scaling of test time compute, CISPO reinforcement learning | Agent and code native workflows across shell, browser, retrieval, and code runners |
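As a rough illustration of how the M2 inference defaults in the table might be applied, the Python sketch below builds an OpenAI-style chat request body without sending it. Several things here are assumptions rather than facts from the article: the model ID `MiniMaxAI/MiniMax-M2`, the helper name `build_chat_request`, the `max_tokens` value, and the idea that the serving endpoint accepts `top_k` as an extra field (it is not part of the standard OpenAI schema, so check your server's documentation).

```python
import json

# Sampling defaults as quoted from the model card in the table above.
# The launch page reportedly lists top_k 20 instead of 40.
M2_SAMPLING = {"temperature": 1.0, "top_p": 0.95, "top_k": 40}

def build_chat_request(
    prompt: str,
    model: str = "MiniMaxAI/MiniMax-M2",  # assumed model ID, adjust to your deployment
    max_tokens: int = 1024,               # illustrative value, not from the article
) -> dict:
    """Build an OpenAI-style chat completion payload (no network call is made)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        **M2_SAMPLING,  # top_k is a non-standard extra field; support varies by server
    }

payload = build_chat_request("Write a function that reverses a linked list.")
print(json.dumps(payload, indent=2))
```

Keeping the defaults in one dictionary makes it easy to switch between the model card and launch page values when comparing outputs.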

Key Takeaways

- M2 ships as open weights on Hugging Face under MIT, with safetensors in F32, BF16, and FP8 F8_E4M3.

- The model is a compact MoE with 229B total parameters and ~10B active per token, which the card ties to lower memory use and steadier tail latency in plan, act, verify loops typical of agents.

- Outputs wrap internal reasoning in ...

- Reported results cover Terminal-Bench, (Multi-)SWE-Bench, BrowseComp, and others, with scaffold notes for reproducibility, and day-0 serving is documented for SGLang and vLLM with concrete deploy guides.

Editorial Notes

MiniMax-M2 lands with open weights under MIT and a mixture-of-experts design with 229B total parameters and about 10B activated per token, targeting agent loops and coding tasks with lower memory use and steadier latency. It ships on Hugging Face in safetensors with FP32, BF16, and FP8 formats, and provides deployment notes plus a chat template. The API documents Anthropic-compatible endpoints and lists pricing with a limited free window for evaluation. vLLM and SGLang recipes are available for local serving and benchmarking. Overall, MiniMax-M2 is a very solid open release.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.