Building Ethical and Transparent AI Frameworks

Artificial intelligence now shapes decisions in business, healthcare and government, which makes ethical and transparent AI frameworks essential. People worry about bias, opacity and data misuse, and deploying AI rapidly without ethical planning can cause reputational harm and legal exposure. Responsible AI governance helps ensure that innovation benefits society while preserving fairness.

Ethical and responsible AI

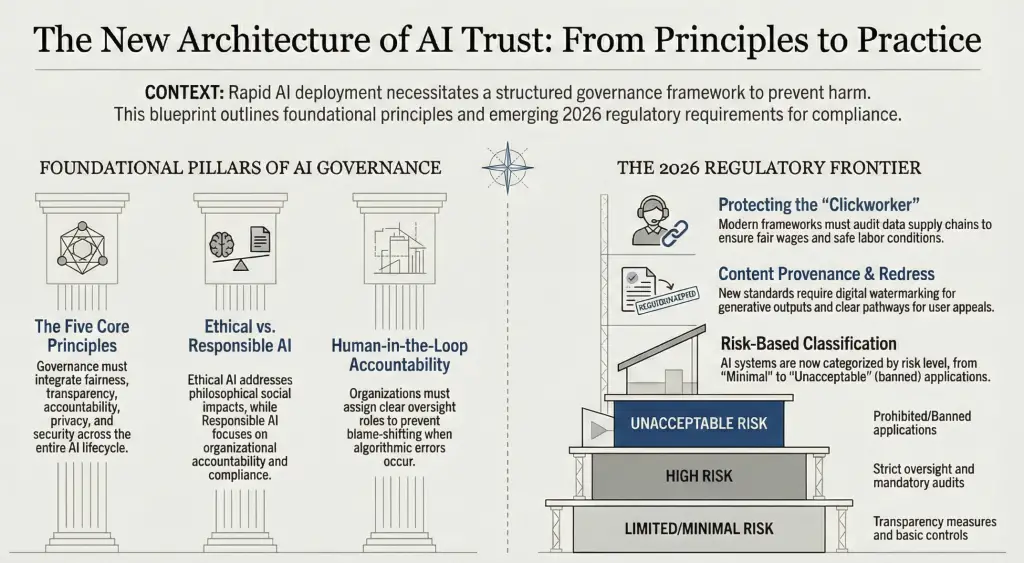

Ethical AI and responsible AI overlap but focus on different ideas. Ethical AI addresses philosophical questions about fairness, justice and social impact. It uses principles to examine how AI changes society. Responsible AI concerns how organisations deploy AI in practice. It focuses on accountability, transparency and compliance with laws. For example, a hospital using AI for diagnostics needs to monitor its algorithms to ensure fair treatment and must be able to explain how they operate to regulators and patients.

Core principles of ethical AI

A strong governance framework uses five principles: fairness, transparency, accountability, privacy and security. Fairness means AI systems should not produce unjustified disparities in outcomes across protected groups. Several definitions of fairness exist and they can conflict with one another, so developers need to set explicit fairness criteria and perform bias audits against them. Investigations into criminal justice algorithms have shown that even seemingly neutral models can produce discriminatory results if fairness is not defined and tested.
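
As a rough illustration of what a bias audit might compute, the sketch below compares the rate of favourable decisions between groups. The function names, the sample data and the two metrics chosen (demographic parity difference and disparate impact ratio) are assumptions made for this example; they represent only one of several possible fairness criteria, not a prescribed method.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the share of favourable predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(rates):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical audit sample: 1 = favourable decision, two groups "A" and "B".
preds = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)
```

A team would still need to decide which threshold counts as a failure. A ratio below 0.8 is a commonly cited rule of thumb, but the appropriate criterion depends on the use case and the applicable law.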

Transparency requires organisations to disclose data inputs and algorithmic logic. Diverse teams should examine training data and algorithms to spot hidden biases and explain decisions clearly. Transparency builds trust by helping people understand why a model makes a recommendation. Accountability ensures that humans remain responsible for outcomes. AI systems cannot take responsibility; organisations must assign oversight roles and define who answers for errors. This prevents blame shifting and encourages careful oversight.

Privacy protects personal data used in training and deploying AI models. Organisations must use encryption, access controls and anonymisation to keep data safe. They also need to comply with data protection laws. Security guards systems against attacks and misuse. Without strong security, attackers could manipulate data or models, undermining reliability and harming users.
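
One building block for protecting identifiers before data reaches a training pipeline is keyed hashing. The sketch below is a minimal example using Python's standard `hmac` and `hashlib` modules; the field names, the record and the key are hypothetical. Note that this is pseudonymisation rather than full anonymisation: anyone holding the key can re-link records, so the key itself needs the access controls described above.

```python
import hashlib
import hmac

def pseudonymise(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymise_record(record: dict, id_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with the named fields pseudonymised."""
    return {
        key: pseudonymise(str(val), secret_key) if key in id_fields else val
        for key, val in record.items()
    }

# Hypothetical key and record; a real key would come from a secrets manager.
KEY = b"example-key-do-not-use"
patient = {"patient_id": "P-1001", "email": "a@example.org", "age": 54}

safe = pseudonymise_record(patient, {"patient_id", "email"}, KEY)
```

Because the same input and key always yield the same digest, records belonging to one person can still be joined across tables without exposing the original identifier.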

Governance frameworks and organisational roles

Principles alone do not guarantee ethical AI. Organisations need structured governance frameworks that unify guidelines, processes and roles across all business units. These frameworks form the basis for risk management, documenting how to identify, mitigate and monitor AI risks. They turn abstract values into practical steps.

A comprehensive framework should define key roles. An AI governance council or ethics board sets strategy, oversees implementation and resolves issues. Data scientists and engineers develop models that follow the framework. Legal and compliance officers ensure alignment with laws. Business owners are accountable for AI in their domains. Data stewards manage data quality and access. Clear accountability ensures that each part of the AI lifecycle has an owner.

Policies and standards must cover the entire AI lifecycle: data collection, model development, validation, deployment, monitoring and retirement. Procedures should include bias mitigation, change management and incident response plans. For instance, an organisation might require regular bias testing and independent audits for models affecting human decisions. Setting these rules helps maintain trust and consistency.

Aligning with global standards

Responsible AI frameworks should align with international guidelines. Instruments such as the OECD AI Principles, the NIST AI Risk Management Framework and the EU AI Act emphasise fairness, accountability and transparency. They also stress human oversight, technical robustness and non‑discrimination. Aligning policies with external standards prepares organisations for evolving regulations.

Emerging gaps and updates for 2026

New challenges have surfaced in 2025 and 2026 that most governance frameworks overlook. These gaps require specific attention to ensure ethical AI deployment.

Human labour and labour rights

AI models rely on large volumes of labelled data provided by human workers. Many of these “clickworkers” operate in low‑income regions and face exploitation. Ethical AI governance must include labour rights. Organisations should audit data supply chains, ensure fair wages and safe working conditions, and avoid using data labelled through forced labour. Adding a “Labour Rights” clause to supply chain policies helps protect the people behind your AI.

Risk‑based classification of AI systems

Not all AI systems pose the same risks. Regulations such as the European Union AI Act classify AI applications into four tiers: Unacceptable Risk, High Risk, Limited Risk and Minimal Risk. Unacceptable applications are banned, while high‑risk systems require strict oversight. Limited risk systems must include transparency measures, and minimal risk systems need few controls. Naming the tiers in your policies helps teams apply the appropriate requirements based on the project’s risk level.
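
The tiering can be encoded so that project tooling applies the right checklist automatically. The sketch below is one possible shape for this. The tier names follow the four categories above, but the control names in `REQUIRED_CONTROLS` are illustrative placeholders chosen for this example, not wording taken from any regulation.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"

# Illustrative control names (assumptions); replace with your own policy items.
REQUIRED_CONTROLS = {
    RiskTier.HIGH: [
        "bias_audit",
        "human_oversight",
        "technical_documentation",
        "incident_response_plan",
    ],
    RiskTier.LIMITED: ["user_disclosure"],
    RiskTier.MINIMAL: [],
}

def controls_for(tier: RiskTier) -> list:
    """Return the controls a project in this tier must complete."""
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError("Unacceptable-risk systems must not be deployed")
    return list(REQUIRED_CONTROLS[tier])

def missing_controls(tier: RiskTier, completed: set) -> list:
    """Return required controls that are not yet marked as completed."""
    return [c for c in controls_for(tier) if c not in completed]

# Hypothetical project that has only finished its bias audit so far.
todo = missing_controls(RiskTier.HIGH, {"bias_audit"})
```

Raising an error for the unacceptable tier, rather than returning an empty checklist, makes it harder for a banned use case to pass through a pipeline by accident.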

Content provenance and output validation

Generative AI can produce hallucinations or misleading content. Emerging rules increasingly call for controls such as “hallucination management” and “watermarking” for generative models; the EU AI Act, for example, requires that synthetic content be marked as artificially generated in a machine-readable format. Governance frameworks should include output validation to check generated content against trusted data. Watermarking embeds hidden markers in outputs to track provenance and discourage misuse. These measures strengthen security and transparency.
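
The sketch below illustrates two simple controls in this spirit, under stated assumptions. `unsupported_numbers` is a deliberately narrow validation check that flags figures in generated text that do not appear in a trusted source. `sign_output` and `verify_output` attach a signed provenance record to an output; this is sidecar metadata, which is simpler than a true watermark because nothing is embedded in the content itself. All function names, the key and the sample strings are hypothetical.

```python
import hashlib
import hmac
import json
import re

def unsupported_numbers(generated: str, trusted: str) -> set:
    """Numbers present in the generated text but absent from the trusted text."""
    pattern = r"\d+(?:\.\d+)?"
    return set(re.findall(pattern, generated)) - set(re.findall(pattern, trusted))

def _message(text: str, model_id: str) -> bytes:
    """Canonical bytes covering the model identifier and a hash of the text."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    payload = {"model_id": model_id, "sha256": digest}
    return json.dumps(payload, sort_keys=True).encode("utf-8")

def sign_output(text: str, model_id: str, secret_key: bytes) -> dict:
    """Build a provenance record with an HMAC-SHA256 signature."""
    signature = hmac.new(secret_key, _message(text, model_id), hashlib.sha256)
    return {"model_id": model_id, "signature": signature.hexdigest()}

def verify_output(text: str, record: dict, secret_key: bytes) -> bool:
    """Check that the text matches the record and was signed with this key."""
    expected = hmac.new(
        secret_key, _message(text, record["model_id"]), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, record["signature"])

# Hypothetical key, source text and model output.
KEY = b"example-key-do-not-use"
trusted = "In 2024 revenue was 4.5 million, up 10%."
generated = "Revenue grew 12% to 4.5 million in 2024."

flagged = unsupported_numbers(generated, trusted)
record = sign_output(generated, "summary-model-v1", KEY)
is_authentic = verify_output(generated, record, KEY)
```

A numeric check like this catches only one class of error. Validating claims in free text generally needs retrieval against the source material or human review.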

Liability and redress for AI decisions

AI governance must address what happens when systems fail. Individuals affected by an AI decision need a clear pathway to appeal and seek remedy. A “Right to Redress” section defines how users can challenge decisions and obtain human review. Including a dedicated appeals process ensures accountability and protects users from harm.

Implementation roadmap and detailed plan

To put responsible AI into practice, follow a structured plan:

- Identify all AI systems used in your organisation. Document their purposes and impact.

- Evaluate data sources for each system. Note data sensitivity and ownership.

- Assess risks by analysing potential biases, privacy issues and compliance gaps.

- Set core principles based on fairness, transparency, accountability, privacy and security.

- Create a governance council with leaders from IT, compliance, legal, ethics and business units.

- Define roles and responsibilities for developing, approving, deploying and monitoring AI systems.

- Assemble an ethics board with external advisors or experts to review high‑impact projects.

- Draft data management policies covering collection, storage and anonymisation.

- Establish model development standards requiring fairness assessments, bias checks and explainability.

- Create documentation templates for training data sources, model features and validation results.

- Design an incident response plan for handling model failures or ethical breaches.

- Develop a model registry that tracks models, owners, deployment status and performance metrics.

- Integrate governance checkpoints into project workflows from design through deployment.

- Involve multidisciplinary teams including ethicists and legal experts in design reviews.

- Implement transparency measures by providing clear explanations for AI decisions and user‑facing documentation.

- Schedule regular audits to review compliance, fairness metrics and operational performance.

- Monitor models continuously using metrics to detect drift, bias or anomalies.

- Retrain or retire models if they fail to meet performance or ethical standards.

- Educate staff on ethical AI principles, risks and compliance responsibilities.

- Promote a culture of responsibility by encouraging reporting of issues without fear of retaliation.

- Align policies with evolving global regulations and industry guidelines.

- Participate in industry forums to stay informed about best practices and regulatory updates.

- Review the framework regularly and adjust based on feedback and changing requirements.

- Measure outcomes to determine whether governance reduces risk and increases trust.

- Refine policies and tools based on lessons learned and technological advances.

- Assess labour conditions in your data supply chain. Confirm that data annotators receive fair wages and safe working conditions.

- Assign risk tiers for each project: Unacceptable, High, Limited or Minimal. Apply policies based on the tier.

- Validate generative outputs through automated checks. Add watermarking and hallucination detection to ensure integrity.

- Create an appeals process for individuals harmed by AI decisions. Provide a clear path for redress.
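
Several of the steps above (keeping a model registry, scheduling audits and monitoring for drift) can be tied together in tooling. The following is a minimal sketch under stated assumptions: the `ModelRecord` fields, the 180-day audit interval and the 0.2 threshold for the population stability index (PSI) are illustrative choices for this example, not requirements drawn from a standard.

```python
import math
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class ModelRecord:
    name: str
    owner: str
    risk_tier: str    # e.g. "high", "limited" or "minimal"
    status: str       # e.g. "deployed" or "retired"
    last_audit: date

def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero
        total += (a - e) * math.log(a / e)
    return total

def review_reasons(record, psi, today, max_audit_age_days=180, psi_threshold=0.2):
    """List the reasons, if any, why a deployed model needs review."""
    reasons = []
    if record.status != "deployed":
        return reasons
    if today - record.last_audit > timedelta(days=max_audit_age_days):
        reasons.append("audit overdue")
    if psi > psi_threshold:
        reasons.append("input drift")
    return reasons

# Hypothetical registry entry and feature distributions (four bins each).
record = ModelRecord("triage-model", "clinical-ops", "high", "deployed", date(2025, 1, 10))
baseline = [0.25, 0.25, 0.25, 0.25]   # proportions at training time
current = [0.10, 0.20, 0.30, 0.40]    # proportions observed in production

psi = population_stability_index(baseline, current)
reasons = review_reasons(record, psi, today=date(2025, 9, 1))
```

A non-empty result could open a ticket for the model owner, who then decides whether to retrain or retire the model.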

Conclusion

AI offers powerful opportunities across many sectors, but unregulated use can cause harm. By applying fairness, transparency, accountability, privacy and security principles and following a structured governance framework, organisations can deploy AI responsibly. Detailed policies, well‑defined roles, regular monitoring and alignment with global standards create a trustworthy AI environment. Responsible AI governance is a necessity for sustainable innovation and public confidence.