Understanding complexity & emergence is more the road to understanding Turing-class entities than is the current focus on Scaling Laws & the Bitter Lesson…

Can machine learning models soon transcend their s***poster roots and approach true Turing-class intelligence? One must be skeptical once one realizes that the reality is not building an ASI (an artificial super-intelligence) but rather looking around and recognizing that we are all already embedded in the SSNASI (species-spanning natural anthology super-intelligence) that is the Mind of humanity today.

Across my screen comes a very nice comment on something I wrote:

Spreadsheet, Not Skynet: Microdoses, Not Microprocessors

from the extremely sharp Richard Baker <https://bsky.app/profile/sharp.blue>:

Richard Baker: <https://bsky.app/profile/sharp.blue/post/3lsikmmh63k22>: ‘People have gotten very excitable about interactive fiction generators that are tuned to generate conversations between people and fictional AIs. The most obvious are [those among] the “AI safety” people who think that it’s some kind of dire warning sign that our interactive fiction generators can generate stories about rogue AIs acting along the lines of scenarios that they’ve previously outlined, and which are therefore in the training corpora. It’s completely obvious that fixed functions from sequences of tokens to probabilities of next tokens, no matter how complex, cannot have plans, goals, desires, fears or any other mental attributes, as they have no state within which things can be represented. They can, however, generate output in which people can “see” plans, goals, desires, values and so on, in exactly the same way we can see such things in Frodo or Odysseus. We have no precedent for such systems, and lack good ways to conceptualise them…

Perhaps we can say that they have reflexes—complicated reflexes?

One pathway for thinking about this and related issues is one that I find myself going down again and again. It is this: iterated fixed functions are Markov processes, and since they have no internal state distinct from the external, currently visible state of the system, Richard is completely correct.

The problem is that there is an old dodge in this business: change the definition of the currently visible state to include not just what the visible part of the system is doing now, but also what it has done, even long in the past. Then everything that might have affected what the invisible internal state is now is included in the arguments of the fixed function. This is in some sense absurd, in that the state vector for your Markov process is now huge. But this dodge turns reflexes into, potentially, plans, goals, desires, values and so on.
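To make the dodge concrete, here is a minimal Python sketch under toy assumptions. `echo_first` is a hypothetical stand-in for a fixed next-token function, chosen only because its output depends on the distant past. Iterated on a finite window, the process is a low-order Markov chain and forgets; iterated on the whole history as its state, the very same fixed function "remembers", at the price of a state that grows every step.

```python
from collections import deque
from typing import Callable, Sequence, Tuple

Token = str
FixedFn = Callable[[Tuple[Token, ...]], Token]

def run_windowed(f: FixedFn, prompt: Sequence[Token], k: int, steps: int) -> list:
    """Iterate f on only the last k tokens: a k-th order Markov process."""
    out = list(prompt)
    window = deque(out[-k:], maxlen=k)  # this window is the entire state
    for _ in range(steps):
        nxt = f(tuple(window))
        out.append(nxt)
        window.append(nxt)              # the oldest token falls out automatically
    return out

def run_full_history(f: FixedFn, prompt: Sequence[Token], steps: int):
    """The dodge: redefine the state as the whole history so far."""
    history = list(prompt)
    state_sizes = []                    # size of the state handed to f at each step
    for _ in range(steps):
        state_sizes.append(len(history))
        history.append(f(tuple(history)))
    return history, state_sizes

def echo_first(state: Tuple[Token, ...]) -> Token:
    """Hypothetical fixed function: repeat the oldest token it can see."""
    return state[0]

print(run_windowed(echo_first, ["a", "b"], k=2, steps=4))  # forgets that "a" came first
print(run_full_history(echo_first, ["a", "b"], steps=4))   # "remembers", but the state grows
```

The function `f` never changes between the two runs; only the definition of "state" does, which is exactly why the dodge erases the distinction between reflex and memory rather than explaining it.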

Of course, this dodge is profoundly unhelpful to those of us speaking English. We are trying to make fuzzy but important distinctions. This dodge keeps us from doing so. And it keeps us from doing so because it erases the key issues: complexity and emergence.

And whenever I get to this point, my mind once again goes—in a reflex, like a low-order Markov process iterating on a fixed function—to this from the smart Scott Aaronson:

Scott Aaronson: Quantum Computing since Democritus <https://cs.famaf.unc.edu.ar/~hoffmann/md19/democritus.html>: ‘You can… say, that’s not really AI. That’s just massive search, helped along by clever programming. Now, this kind of talk drives AI researchers up a wall. They say: if you told someone in the sixties that in 30 years we’d be able to beat the world grandmaster at chess, and asked if that would count as AI, they’d say, of course it’s AI! But now that we know how to do it, now it’s no longer AI. Now it’s just search….

In the last fifty years, have there been any new insights about the Turing Test itself? In my opinion, no. There has, on the other hand, been a non-insight, which is called Searle’s Chinese Room…. The way it goes is, let’s say you don’t speak Chinese…. You sit in a room, and someone passes you paper slips through a hole in the wall with questions written in Chinese, and you’re able to answer the questions (again in Chinese) just by consulting a rule book. In this case, you might be carrying out an intelligent Chinese conversation, yet by assumption, you don’t understand a word of Chinese! Therefore symbol-manipulation can’t produce understanding…. Duh: you might not understand Chinese, but the rule book does! Or if you like, understanding Chinese is an emergent property of the system consisting of you and the rule book, in the same sense that understanding English is an emergent property of the neurons in your brain.

Like many other thought experiments, the Chinese Room gets its mileage from a deceptive choice of imagery—and more to the point, from ignoring computational complexity. We’re invited to imagine someone pushing around slips of paper with zero understanding or insight—much like the doofus freshmen who write $(a+b)^2=a^2+b^2$ on their math tests.

But how many slips of paper are we talking about?

How big would the rule book have to be, and how quickly would you have to consult it, to carry out an intelligent Chinese conversation in anything resembling real time? If each page of the rule book corresponded to one neuron… then probably we’d be talking about a “rule book” at least the size of the Earth, its pages searchable by a swarm of robots traveling at close to the speed of light.

When you put it that way, maybe it’s not so hard to imagine that this enormous Chinese-speaking entity—this dian nao 电脑—that we’ve brought into being might have something we’d be prepared to call understanding or insight…

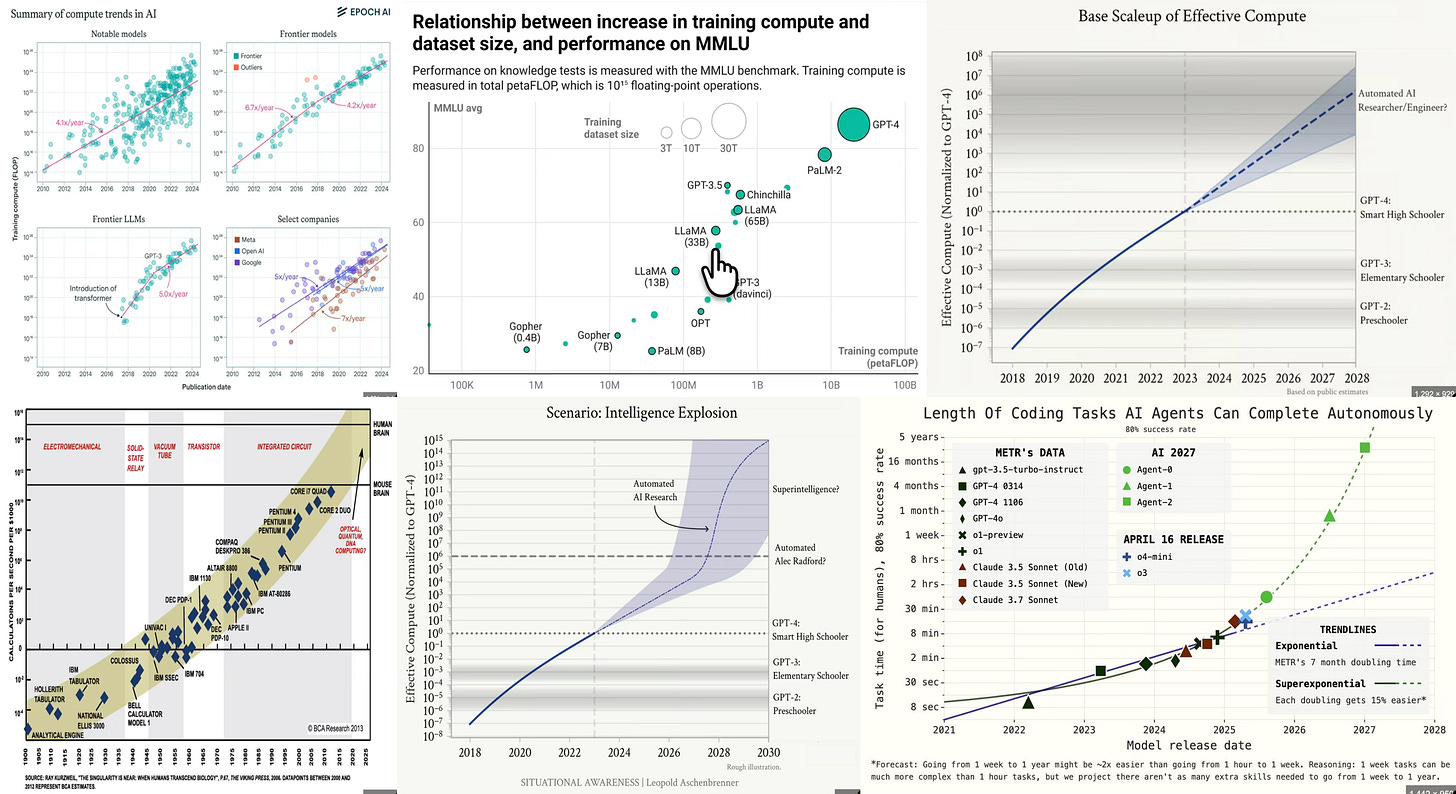

The bull case for the (future) “cognitive” capacities of our modern advanced machine-learning models—MAMLMs—is always made by drawing straight lines on semilog paper and projecting those lines into the future. Or even having them turn further up!

People have been trained to think that drawing straight lines on semilog paper (projecting exponential trends into the indefinite future) is a reasonable thing to do, largely because of the astonishing success of Moore’s Law since 1965. Gordon Moore’s famous observation that the number of transistors on integrated circuits was doubling at a steady rate (every year in his original 1965 paper, revised to roughly every two years in 1975) was not merely a curiosity; it was a prophecy that became a self-fulfilling roadmap for the semiconductor industry. For half a century, this exponential trend underwrote the relentless improvement in computing power, fueling everything from the personal computer revolution to the explosion of the internet.
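The arithmetic behind the ruler is easy to check. Here is a minimal Python sketch, assuming a steady two-year doubling time and a starting value normalized to 1 (no actual transistor counts are used). Fifty years of doubling every two years is a factor of 2^25, about 33.6 million, and the base-10 logarithm of such a path rises by the same amount every year, which is exactly why it plots as a straight line on semilog paper.

```python
import math

def growth_factor(years: float, doubling_time: float) -> float:
    """Total multiplication after `years` of steady doubling every `doubling_time` years."""
    return 2 ** (years / doubling_time)

def log10_path(start: float, doubling_time: float, years: range) -> list:
    """log10 of an exponential path; on semilog paper these points lie on a line."""
    return [math.log10(start * growth_factor(t, doubling_time)) for t in years]

print(growth_factor(50, 2))                  # 2**25 = 33554432.0
path = log10_path(1.0, 2.0, range(0, 11))
steps = [b - a for a, b in zip(path, path[1:])]
print(steps)                                 # each step is, up to rounding, log10(2) / 2
```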

The lesson drilled into the collective consciousness of technologists, economists, and even lay observers was simple: technological progress is not just fast, it is accelerating. And so, when faced with the question of artificial intelligence, it is all too tempting to reach for the same ruler, to assume that what worked for silicon will work for cognition, and that the future will arrive on schedule, riding the smooth upward curve of the exponential.

But this faith in scaling is not just a product of Moore’s Law. It is reinforced by what Richard Sutton has called the Bitter Lesson:

- In the long run, the most effective AI methods are those that leverage computation and data at scale.
- Those that encode human insights or hand-craft rules are not so effective.
- Again and again, brute-force approaches (whether in chess, Go, or language modeling) have outstripped the clever, bespoke methods of earlier generations.

The Bitter Lesson has been, so far, a rebuke to the hubris of human ingenuity: it is not our cleverness, but our willingness to harness massive computational resources, that yields the greatest advances. This lesson, bitter as it may be for those who fancy themselves architects of intelligence, has become dogma in the AI community.

Add to this Feynman’s “room at the bottom” point: the recognition that there is an enormous amount of room for technological progress at ever-smaller scales. In his famous 1959 lecture, Feynman speculated about the possibilities of manipulating individual atoms and molecules, inaugurating the field of nanotechnology. The implication for AI is clear: if we can keep shrinking the building blocks of computation, there is no obvious limit to how much intelligence we might engineer—at least, so the argument goes.

Yet, is it a reasonable thing to do—to draw straight lines on semilog paper and expect the future to conform to our extrapolations?

Here we must stop and get off the trolley.

Exponential trends, as any student of population biology or innovation diffusion will tell you, do not go on forever. They “logisticate”: that is, they follow the logistic curve, which starts exponentially but eventually saturates as it runs into physical, economic, or conceptual limits. Moore’s Law itself is exhausted: the cost and complexity of further miniaturization have mounted too high. The same will be true for “AI” someday. Scaling laws hold up to a point, but eventually diminishing returns set in, and the curve bends. That someday may have already passed.
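Here is a minimal Python sketch of what logisticating does to a ruler-based forecast. It uses hypothetical parameters (a ceiling of 1,000, a growth rate of 0.5 per year, an inflection point at year 20), not any real data. It fits a straight line to the logarithms of the first ten years, which look exponential, and then extrapolates.

```python
import math

def logistic(t: float, cap: float, rate: float, midpoint: float) -> float:
    """Logistic curve: close to exponential early on, saturating at `cap`."""
    return cap / (1 + math.exp(-rate * (t - midpoint)))

def fit_exponential(ts: list, ys: list):
    """Least-squares line through (t, ln y): a ruler laid on semilog paper."""
    logs = [math.log(y) for y in ys]
    t_bar = sum(ts) / len(ts)
    l_bar = sum(logs) / len(logs)
    num = sum((t - t_bar) * (l - l_bar) for t, l in zip(ts, logs))
    den = sum((t - t_bar) ** 2 for t in ts)
    slope = num / den
    intercept = l_bar - slope * t_bar
    return lambda t: math.exp(intercept + slope * t)

# Hypothetical parameters, not estimates of anything real.
cap, rate, mid = 1000.0, 0.5, 20.0
early = list(range(0, 11))               # only the early, exponential-looking years
ruler = fit_exponential(early, [logistic(t, cap, rate, mid) for t in early])

for t in (5, 10, 20, 30, 40):
    print(f"year {t:>2}: actual {logistic(t, cap, rate, mid):>9.1f}   ruler {ruler(t):>13.1f}")
```

In-sample, the ruler tracks the data to within a fraction of a percent. By year 40, it overshoots the ceiling by a factor of more than ten thousand.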

And this dodges the truly key issues: complexity and emergence.

Complexity, in this context, refers to the sheer intricacy of the systems we are trying to build or model. Emergence is the phenomenon by which simple rules or components give rise to behaviors or properties that are not obvious from the rules themselves. Both concepts are central to the study of brains and minds—and to the limits of artificial intelligence. The human brain is not just a large neural network; it is a product of billions of years of evolution, shaped by the interplay of genes, environment, and culture. Its complexity is not merely quantitative but qualitative; its emergent properties—consciousness, intentionality, creativity—are not easily reducible to the sum of its parts. Thus, the challenge is not simply one of scale, but of understanding and replicating the conditions under which emergence occurs.
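One standard toy illustration of emergence, not drawn from the original, is an elementary cellular automaton. Each cell's next state is read off an eight-entry lookup table indexed by the cell itself and its two neighbors. Using Wolfram's rule 30 and starting from a single live cell, that trivially simple fixed rule produces a pattern whose center column looks random. The grid width and the always-dead edges below are arbitrary choices for the sketch.

```python
def step(cells: list, rule: int) -> list:
    """One synchronous update of an elementary cellular automaton.

    Bit (4*left + 2*center + right) of `rule` gives each cell's new state;
    cells beyond the edges are treated as permanently 0.
    """
    padded = [0] + cells + [0]
    return [(rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]

def run(rule: int, width: int, steps: int) -> list:
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows

for row in run(30, 31, 15):
    print("".join("#" if c else "." for c in row))
```

The entire "rule book" here is a single byte, yet nothing in those eight bits tells you, short of running it, what the pattern will look like.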

In my view, the natural way to address these questions is through a comparative exercise: to ask how the “size” of the human brain stacks up against the “size” of present and future large language models (LLMs). But “size” here is a slippery notion. Do we mean the number of parameters in a neural network? The number of synapses in a brain? The energy budget? The data throughput? Each metric tells us something, but none captures the whole story. For example, the human brain contains roughly 86 billion neurons and hundreds of trillions of synapses, operating with a power budget of about 20 watts—astonishing efficiency compared to even the most advanced supercomputers. Meanwhile, the largest LLMs boast hundreds of billions, even trillions, of parameters, but their architectures and training regimes are fundamentally different from biological brains. The analogy is tempting, but the differences are profound.

This leads to the first key question: How complex is the human brain, anyway, relative to our models? The answer is, I think, that we are only beginning to grasp the magnitude of the problem. The brain’s complexity is not just a matter of scale, but of organization: its connectivity, its plasticity, its capacity for self-modification. Unlike artificial neural networks, which are typically static once trained, the brain is a living, learning, adapting organ. It is shaped not only by genetics but by experience, by social interaction, by culture. To compare it to a language model is to compare a rainforest to a spreadsheet: both have structure, but one is alive.

If one tries to do a “parameter” count, one starts with roughly 10^15 synapses in a human brain. Scale that up to account for the brain’s dynamic plasticity, its real-time learning, the role of glia and neuromodulators, and the internal complexity of each neuron beyond the simple dendrites-in, axon-out picture, and one can get up to 10^18 “parameters”. Anthropic’s Claude is still at roughly 10^11. That suggests as big a difference between our brains and frontier MAMLMs as between today’s frontier MAMLMs and a 200-node four-layer perceptron.
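The orders-of-magnitude comparison can be checked with a few lines of Python. This sketch covers only the arithmetic. The 10^18 and 10^11 figures are the ones in the paragraph above, and reading “200-node four-layer perceptron” as four fully connected layers of 50 units each is an assumption.

```python
import math

BRAIN_PARAMS = 1e18       # upper-end "parameter" count for a human brain, from the text
FRONTIER_PARAMS = 1e11    # the text's figure for a frontier model

def mlp_params(layer_sizes: list) -> int:
    """Weights plus biases of a fully connected feed-forward network."""
    return sum(a * b + b for a, b in zip(layer_sizes, layer_sizes[1:]))

gap = BRAIN_PARAMS / FRONTIER_PARAMS            # brain-to-model ratio
implied_small_net = FRONTIER_PARAMS / gap       # a net that far below the model

# Assumed reading of "200-node four-layer perceptron": 4 layers x 50 units.
perceptron = mlp_params([50, 50, 50, 50])

print(f"brain / model   = 10^{math.log10(gap):.0f}")
print(f"implied net     = {implied_small_net:,.0f} parameters")
print(f"4x50 perceptron = {perceptron:,} parameters (10^{math.log10(perceptron):.1f})")
```

The implied network (10,000 parameters) and the assumed 4x50 perceptron (7,650 parameters) land within a factor of about 1.3 of each other. On the text's figures, then, the analogy holds to well within an order of magnitude.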

A second key question: How much more than a blank slate can the process of biochemical development from a single cell produce in a brain? Here, the answer is: quite a lot. The process of neurodevelopment is a marvel of self-organization, guided by genetic instructions but profoundly influenced by environmental inputs. From a single fertilized egg, a human embryo orchestrates the division, migration, and differentiation of billions of cells, weaving them into the intricate tapestry of the nervous system. The end result is a brain that is both pre-programmed and plastic, capable of learning languages, playing chess, composing music, and inventing calculus. The blank slate is a myth; the brain is born ready to learn, but it is also endowed with predispositions, biases, and instincts.

A third key question: How close to that limit has evolution trained our process of biochemical development to operate? Evolution is a relentless optimizer, but it is not an engineer; it works with what is available, subject to constraints of time, energy, and reproductive fitness. The human brain is the product of millions of years of tinkering, but it is not perfect. It is, however, astonishingly effective—we think. It balances speed, efficiency, and flexibility in ways that our artificial systems can only envy. Whether we are close to the theoretical limit of what is possible is an open question, but it is clear that evolution has pushed the envelope further than any human designer has yet managed.

Moreover, we are, in a sense, already distributed-computing anthology-mind superintelligences. Humanity’s collective intelligence is not just the sum of individual brains, but the product of communication, collaboration, and cultural accumulation. Humanity is already superintelligence, an anthology superintelligence: a vast, distributed network of minds, technologies, and institutions, each building on the work of others.

I am truly a Turing-class entity.

If I, truly a Turing-class entity, truly working on my own, wanted to use my cognitive skills, my sensors, and my manipulators to open and gain energy to support my functioning from one of the walnuts growing on the tree ten yards to my left, I would have quite a difficult time doing that.

But I can do it easily. I can do it easily because I am backed up by the anthology superintelligence that is humanity. That SSNASI—species-spanning natural anthology superintelligence—backs me up by telling me that:

- there are things labeled “nutcrackers”,
- they can be made out of steel,
- it has in fact made many such, and
- the closest nutcracker is stored in the top-left kitchen cabinet drawer.

In this light, the quest to build artificial superintelligence is puzzling: we want tools for thought to empower and extend the SSNASI we already have, not to build an ASI that, as Nilay Patel reports, its builders think of as a god. We are looking for the extension of human capabilities through tools, language, and shared knowledge, not for some bizarro, magic-mushroom-driven, hallucinatory remake of “Frankenstein”.

And what are we, on our current trajectory, actually building with our MAMLMs anyway? Are we homing in on better and better approximations of internet s***posters (systems that can mimic the style and content of online discourse, but without understanding or intentionality), or are we building something else? The distinction matters. Pretraining, the process by which LLMs are exposed to vast corpora of text, produces models that are adept at imitation, but not necessarily at reasoning or goal-directed behavior. Reinforcement Learning from Human Feedback (RLHF) seeks to bridge this gap, aligning model outputs with human preferences and values. But the question remains: are we creating minds, or merely mirrors?

Also crossing my screen this morning is this from Andrej Karpathy:

Andrej Karpathy: <https://x.com/karpathy/status/1937902205765607626/>: ‘+1 for “context engineering” over “prompt engineering”. People associate prompts with short task descriptions you’d give an LLM in your day-to-day use.

When in every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step.

Science, because doing this right involves task descriptions and explanations, few shot examples, RAG, related (possibly multimodal) data, tools, state and history, compacting… Too little or of the wrong form and the LLM doesn’t have the right context for optimal performance. Too much or too irrelevant and the LLM costs might go up and performance might come down. Doing this well is highly non-trivial.

And art because of the guiding intuition around LLM psychology of people spirits. On top of context engineering itself, an LLM app has to:

– break up problems just right into control flows

– pack the context windows just right

– dispatch calls to LLMs of the right kind and capability

– handle generation-verification UIUX flows

– a lot more—guardrails, security, evals, parallelism, prefetching, ...

So context engineering is just one small piece of an emerging thick layer of non-trivial software that coordinates individual LLM calls (and a lot more) into full LLM apps. The term “ChatGPT wrapper” is tired and really, really wrong…
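To make “pack the context windows just right” a little more concrete, here is a deliberately crude Python sketch. It is not Karpathy's method or any library's API. `Snippet`, `pack_context`, the relevance scores (assumed to come from some retriever), the relevance floor, and the word-count stand-in for a real tokenizer are all hypothetical. It captures only the trade-off in the quote: too little context starves the model, while too much, or too irrelevant, costs tokens and can hurt performance.

```python
from dataclasses import dataclass

@dataclass
class Snippet:
    text: str
    relevance: float    # hypothetical score in [0, 1], assumed to come from a retriever

def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())

def pack_context(task: str, snippets: list, budget: int,
                 min_relevance: float = 0.3) -> str:
    """Greedy packer: keep the task, then add the most relevant snippets
    that clear the relevance floor and still fit in the token budget."""
    parts = [task]
    used = approx_tokens(task)
    for snip in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        if snip.relevance < min_relevance:
            break                       # sorted descending, so the rest are weaker
        cost = approx_tokens(snip.text)
        if used + cost <= budget:
            parts.append(snip.text)
            used += cost
    return "\n\n".join(parts)

snippets = [
    Snippet("Lunch plans for Friday are still undecided.", 0.1),
    Snippet("Q3 revenue rose four percent.", 0.9),
    Snippet("Q3 costs fell after the June reorganization.", 0.7),
]
print(pack_context("Summarize the Q3 report.", snippets, budget=20))
```

Sorting by relevance and stopping at a floor is about the simplest policy one could write down. A real app might also weigh recency, deduplicate, and compact long items, which is part of the "thick layer" the quote describes.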

This is a task that is a whole different animal from simply throwing more and more data at ever-larger blank-slate neural networks, and hoping that the Bitter Lesson and scaling laws will carry us through. Andrej Karpathy thus appears reality-grounded, in a sense that many of his peers (especially when they are trying to raise money from overgullible venture capitalists) are not. He understands that the core models are better and better approximations to internet s***posters, and seeks effective ways of noooodging them out of their natural s***poster nature into doing useful work.

Above all, as I survey this terrain, I find myself becoming more and more certain of this: the key issues truly are complexity and emergence. And here, not surprisingly, the key conceptual problem is that complexity and emergence are not so much ideas as labels for problems that we cannot solve, because we do not yet have real ideas to fill the empty boxes.

But until we have ideas to genuinely fill the boxes, I have to believe that anything we could call a Turing-class silicon software entity is, well, as far from our frontier MAMLMs as they are from the Mark I Perceptron Frank Rosenblatt demonstrated at work back in 1960.

- Aaronson, Scott. 2013. Quantum Computing since Democritus. Cambridge: Cambridge University Press. https://cs.famaf.unc.edu.ar/~hoffmann/md19/democritus.html
- Baker, Richard. 2025. “People have gotten very excitable…” Bluesky, June 26, 2025. https://bsky.app/profile/sharp.blue/post/3lsikmmh63k22
- Biro, Gabor. 2024. “Our Brain’s 86 Billion Neurons: Can LLMs Surpass Them?” Birow, December 22, 2024. https://www.birow.com/agyunk-86-milliard-neuron-llm
- DeLong, J. Bradford. 2025. “Spreadsheet, Not Skynet: Microdoses, Not Microprocessors.” Grasping Reality, June 24, 2025. https://braddelong.substack.com/p/spreadsheet-not-skynet-microdoses
- DeLong, J. Bradford. 2022–25. SubTuringBradBot Grasping Reality Subnewsletter. Accessed June 29, 2025. https://braddelong.substack.com/s/subturingbradbot
- DeLong, J. Bradford. 2024. “What the East African Plains Ape Anthology Intelligence Has Managed to Do, with Respect to the Economy.” Grasping Reality, December 7, 2024. https://braddelong.substack.com/p/what-the-east-african-plains-ape
- DeLong, J. Bradford. 2018. “Humans as an Anthology Intelligence.” Grasping Reality, August 31, 2018. https://www.bradford-delong.com/2018/08/humans-as-an-anthology-intelligence.html
- DeLong, J. Bradford, & A. Michael Froomkin. 2000. “Speculative Microeconomics for Tomorrow’s Economy.” In Internet Publishing and Beyond: The Economics of Digital Information & Intellectual Property, edited by Brian Kahin and Hal Varian, 97–137. Cambridge, MA: MIT Press. http://osaka.law.miami.edu/~froomkin/articles/spec.htm
- Feynman, Richard P. 1960. “There’s Plenty of Room at the Bottom.” Engineering and Science 23 (5): 22–36. (Originally presented at the annual meeting of the American Physical Society, Pasadena, California, December 29, 1959.) https://calteches.library.caltech.edu/47/2/1960Bottom.pdf
- Karpathy, Andrej. 2025. “+1 for ‘Context Engineering’…” Twitter, June 25, 2025. https://x.com/karpathy/status/1937902205765607626
- Lefkowitz, Melanie. 2019. “Professor’s perceptron paved the way for AI – 60 years too soon.” Cornell Chronicle, September 25, 2019. https://news.cornell.edu/stories/2019/09/professors-perceptron-paved-way-ai-60-years-too-soon
- Millidge, Beren. 2022. “The Scale of the Brain vs Machine Learning.” Beren’s Blog, August 6, 2022. https://www.beren.io/2022-08-06-The-scale-of-the-brain-vs-machine-learning/
- Moore, Gordon E. 1965. “Cramming More Components onto Integrated Circuits.” Electronics 38 (8): 114–117. https://en.wikipedia.org/wiki/Moore%27s_law
- Patel, Nilay. 2024. “Why AI companies are dropping the doomerism.” Decoder, The Verge, October 24, 2024. https://www.theverge.com/24278413/ai-manifesto-anthropic-dario-amodei-agi-digital-god-openai-sam-altman-decoder-podcast
- Patel, Nilay. 2025. “They think they are building god.” The Verge, accessed June 29, 2025. [URL needed for precise citation]
- Sutton, Richard S. 2019. “The Bitter Lesson.” March 13, 2019. http://www.cs.utexas.edu/~sutton/papers/bitter-lesson.html
- Willison, Simon. 2025. “Context Engineering.” June 27, 2025. https://simonwillison.net/2025/Jun/27/context-engineering/

If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…

#reflexes-vs-desires-&-actions